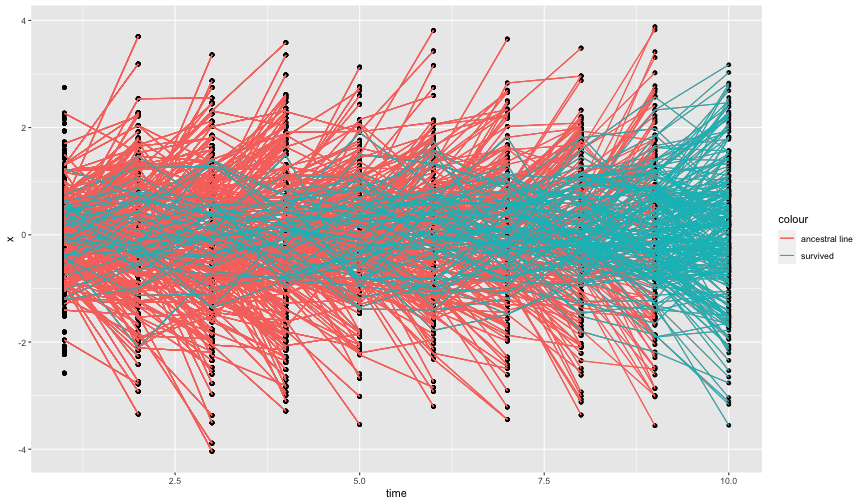

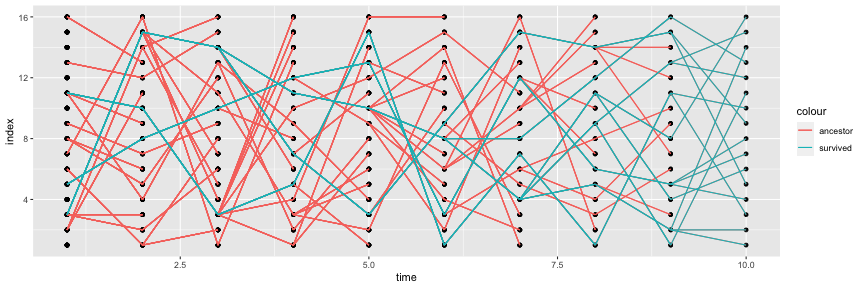

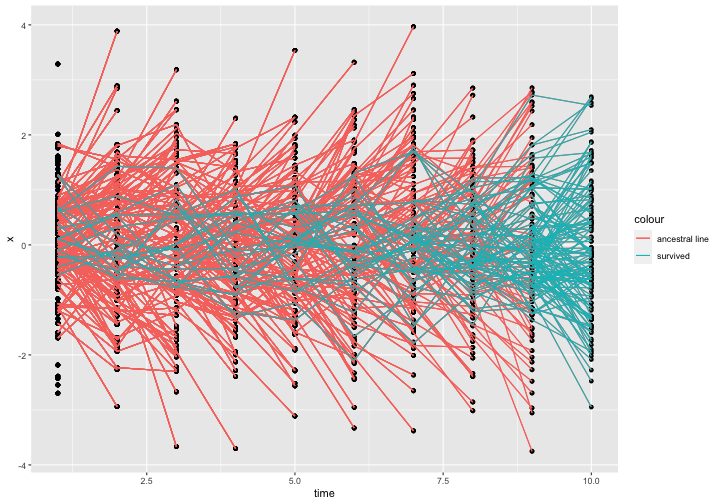

class: center, middle, inverse, title-slide # Sequential Monte Carlo in Statistics ## July 2019 ### Anthony Lee <br/><br/> University of Bristol & Alan Turing Institute <br/><br/> With many thanks to the Statistical Society of Australia and ACEMS --- # Outline - Introduction: Classical Monte Carlo & Importance Sampling - Sequential Monte Carlo & hidden Markov models - Arbitrary target distributions - Twisted flows - Variance estimation <br/> For more details, and references, please see the recent survey Doucet & Lee. Sequential Monte Carlo methods. In Handbook of Graphical Models, 2018. A preprint is linked to from my website. --- class: inverse, center, middle # Introduction ## Classical Monte Carlo & Importance Sampling --- # Classical Monte Carlo: integral Main idea: approximate sums/integrals with random variables. Notation: measurable space `\((\mathsf{X}, \mathcal{X})\)`, `\(f:\mathsf{X}\rightarrow\mathbb{R}\)` and `\(\mu\)` a measure on `\(\mathcal{X}\)`, `$$\mu(f):=\int_{\mathsf{X}}f(x)\mu({\rm d}x).$$` -- For example, consider - `\(\mathsf{X} = \{1,\ldots,s\}\)` so `\(\mu(f)\)` is a sum, - `\(\mathsf{X} = \mathbb{N}\)` so `\(\mu(f)\)` is an infinite sum, - `\(\mu\)` having a density w.r.t. Lebesgue measure on `\(\mathbb{R}^d\)`. -- If `\(\mu\)` is a probability measure / distribution, `\(\mu(1)=1\)` and `$$\mu(f)=\mathbb{E}\left[f(X)\right],\qquad X\sim\mu.$$` --- # Classical Monte Carlo: approximation Assume `\(\mu\)` is a probability measure / distribution. Monte Carlo (particle) approximation of `\(\mu\)` is a discrete probability measure `$$\mu^{N} := \frac{1}{N} \sum_{i=1}^N \delta_{\zeta_i},\qquad\zeta_{i}\overset{\rm iid}{\sim}\mu$$` -- So the Monte Carlo approximation of `\(\mu(f)\)` is the integral of `\(f\)` w.r.t. `\(\mu^N\)` `$$\mu^{N}(f):=\frac{1}{N}\sum_{i=1}^{N}f(\zeta_{i}).$$` -- **Law of large numbers**: `\(\mu^{N}(f)\overset{\rm a.s.}{\rightarrow}\mu(f)\)` as `\(N\rightarrow\infty\)`. Fundamental justification, but no handle on accuracy for finite `\(N\)`. --- # Classical Monte Carlo: accuracy Assume `\(\mu(f^2) < \infty\)`, i.e `\(f \in L_2(\mathsf{X}, \mu)\)`. The variance of `\(\mu^N(f)\)` is `$${\rm var}(\mu^N(f)) = \frac{1}{N} {\rm var}(f(X)) = \frac{1}{N}\mu(\bar{f}^2),$$` where `\(\bar{f} := f - \mu(f)\)`. -- **Central Limit Theorem**: as `\(N \to \infty\)` we have asymptotic normality `$$N^{1/2} ( \mu^N(f) - \mu(f) ) \overset{L}{\to} N(0, \mu(\bar{f}^2)).$$` -- Good news: `\(|\mu^N(f) - \mu(f)|\)` is `\(\mathcal{O}_P(N^{-1/2})\)`. Bad news: `\(\mu(\bar{f}^2)\)` may be very large, e.g. when `\(\mathsf{X}\)` is "large". --- # Importance sampling Say we want to approximate `\(\pi(f)\)` but we can only simulate according to `\(\mu\)`? -- If `\(\mu\)` dominates `\(\pi\)`, i.e. `\(\pi(x) > 0 \Rightarrow \mu(x) > 0\)`, then we can write `$$\pi(f) = \mu(w \cdot f) = \int f(x) w(x) \mu({\rm d}x),$$` where `\(w = {\rm d} \pi / {\rm d} \mu\)` is the ratio of densities of `\(\pi\)` and `\(\mu\)`. -- So we can approximate `\(\pi(f)\)` by `\(\mu^N(w \cdot f)\)`. This is an unbiased approximation with variance `$${\rm var}(\mu^N(w \cdot f)) = \frac{\mu(w^2 \cdot f^2) - \pi(f)^2}{N} = \frac{\pi(w \cdot f^2) - \pi(f)^2}{N}.$$` --- # Self-normalized importance sampling We can also "self-normalize" the approximation, i.e. compute `$$\pi^N_{\rm SN}(f) = \frac{\mu^N(w \cdot f)}{\mu^N(w)} = \frac{\sum_{i=1}^N w(X_i)f(X_i)}{\sum_{i=1}^N w(X_i)} = \frac{\mu^N \cdot w}{\mu^N(w)} (f).$$` Useful when `\(w\)` can be computed only up to an unknown normalizing constant, e.g. when `\(\pi\)` is a posterior distribution. -- This is biased but strongly consistent and asymptotically normal as `\(N \to \infty\)`. The asymptotic variance is `$$\lim_{N \to \infty} N ~ {\rm var}(\pi^N_{\rm SN}(f) ) = \pi(w \cdot \bar{f}^2),$$` where `\(\bar{f} = f - \pi(f)\)`. The asymptotic variance can be smaller than the asymptotic variance for IS. --- class: inverse, center, middle # Sequential Monte Carlo ## Motivated by Hidden Markov Models --- # Hidden Markov model Let `\((X_1,\ldots,X_n)\)` be a Markov chain with transition kernels `\(M_2,\ldots,M_n\)` and initial distribution `\(\mu\)`. So `\(X_1 \sim \mu\)` and `\(X_p \mid X_{p-1} \sim M_p(X_{p-1}, \cdot)\)`. Let `\(Y_p \mid (X_1,\ldots,X_n) \sim g(X_p,\cdot)\)`. In an HMM, the observations are `\(Y_1,\ldots,Y_n\)`. <center> <p style="margin-bottom:-3cm"> <div id="htmlwidget-aa7f6bffed4abb07491a" style="width:504px;height:504px;" class="grViz html-widget"></div> <script type="application/json" data-for="htmlwidget-aa7f6bffed4abb07491a">{"x":{"diagram":"\ndigraph hmm {\n\n # Graph statements\n graph [layout = dot, overlap = false]\n\n # several \"node\" statements\n node [shape = circle]\n X1 [label = <<I>X<\/I><SUB>1<\/SUB>>]\n X2 [label = <<I>X<\/I><SUB>2<\/SUB>>]\n Xn [label = <<I>X<SUB>n<\/SUB><\/I>>]\n \n Y1 [label = <<I>Y<\/I><SUB>1<\/SUB>>]\n Y2 [label = <<I>Y<\/I><SUB>2<\/SUB>>]\n Yn [label = <<I>Y<SUB>n<\/SUB><\/I>>]\n \n node [shape = plaintext]\n dots [label = <...>]\n \n subgraph {\n rank = same; X1; X2; Xn; dots\n }\n subgraph {\n rank = same; Y1; Y2; Yn;\n }\n\n # several \"edge\" statements\n X1->X2 [ label = < <I>M<SUB>2<\/SUB><\/I> >]\n X2->dots;\n dots->Xn [ label = < <I>M<SUB>n<\/SUB><\/I> >]\n X1->Y1 [label = < <I>g<\/I>>]\n X2->Y2 [label = < <I>g<\/I>>]\n Xn->Yn [label = < <I>g<\/I>>]\n}\n","config":{"engine":"dot","options":null}},"evals":[],"jsHooks":[]}</script> </p> </center> --- # HMM examples - Economics: asset prices driven by a latent process - Chemistry: reactions driven by concentrations of chemicals - Physics: imperfect measurements of a dynamical system - Robotics: localization in space through noisy observations - Ecology: sparse observations of animals in space and time - Environment: noisy/partial observations of climate <br/> - Essentially any discretely and partially observed Markov process. --- # Objects of interest For `\(p \in \{1,\ldots,n\}\)`, we are interested in, 1. `\(\eta_p\)` : the distribution of `\(X_p\)` given `\(Y_1,\ldots,Y_{p-1}\)`. [ Note `\(\eta_1 \equiv \mu\)` ]. 1. `\(\hat{\eta}_p\)` : the distribution of `\(X_p\)` given `\(Y_1,\ldots,Y_{p}\)`. 1. `\(Z_p\)` : the marginal likelihood associated with `\(y_1,\ldots,y_p\)`. -- For example, we might have densities in common notation `$$\begin{align} \eta_p(x_p) &= f(x_p \mid y_1,\ldots,y_{p-1}) \\ \hat{\eta}_p(x_p) &= f(x_p \mid y_1,\ldots,y_p) \\ Z_p &= f(y_1,\ldots,y_p) \end{align}$$` -- To simplify notation in what follows, we define `$$G_p(x) := g(x, y_p),$$` rendering the dependence on `\(y_1,\ldots,y_n\)` implicit. --- # Recursive updates With densities, we have `\(\eta_1(x_1) = \mu(x_1) = f(x_1)\)`, and `$$\hat{\eta}_1(x_1) = f(x_1 \mid y_1) = \frac{f(x_1) f(y_1 \mid x_1)}{f(y_1) } = \frac{\eta_1 \cdot G_1}{\eta_1(G_1)}(x_1).$$` Similarly, for `\(p \in \{2,\ldots,n\}\)`, `$$\eta_p(x_p) = f(x_p \mid y_{1:p-1}) = \int f(x_{p-1} \mid y_{1:p-1}) f(x_p \mid x_{p-1}) {\rm d}x_{p-1} = \hat{\eta}_{p-1}M_p(x_p),$$` and `$$\hat{\eta}_p(x_p) = \frac{f(x_p \mid y_{1:p-1}) f(y_p \mid x_p)}{\int f(y_p \mid x_p')f(x_p' \mid y_{1:p-1}) {\rm d}x_p' } = \frac{\eta_p \cdot G_p}{\eta_p(G_p)}(x_p).$$` We also have `$$Z_p = f(y_{1:p}) = f(y_{1:p-1}) f(y_p \mid y_{1:p-1}) = Z_{p-1} \eta_p(G_p).$$` --- # Forward algorithm (finite state space) **Algorithm**: 1. Set `$$\eta_1 = \mu, \qquad Z_1 = \eta_1(G_1), \qquad \text{ and } \qquad \hat{\eta}_1 = \frac{\eta_1 \cdot G_1}{\eta_1(G_1)}.$$` 2. For `\(p = 2,\ldots,n\)`: set `$$\eta_p = \hat{\eta}_{p-1} M_p, \qquad Z_p = Z_{p-1} \eta_p(G_p), \qquad \text{ and } \qquad \hat{\eta}_p = \frac{\eta_p \cdot G_p}{\eta_p(G_p)}.$$` -- <br/><br/> In SMC we replace intractable objects with particle approximations. --- # SMC (general state space) Sample `\(\zeta_1^i \overset{\rm iid}{\sim} \mu = \eta_1\)` for `\(i \in \{1,\ldots,N\}\)`. Set `$$\eta_1^N = \frac{1}{N} \sum_{i=1}^N \delta_{\zeta_1^i}, \qquad Z_1^N = \eta_1^N(G_1), \qquad \hat{\eta}^N_1 = \frac{\eta_1^N \cdot G_1}{\eta_1^N(G_1)}.$$` For `\(p = 2,\ldots,n\)`: sample `$$\zeta_p^i \overset{\rm iid}{\sim} \hat{\eta}^N_{p-1}M_p = \frac{\sum_{j=1}^N G_{p-1}(\zeta_{p-1}^j)M_p(\zeta_{p-1}^j, \cdot)}{\sum_{j=1}^N G_{p-1}(\zeta_{p-1}^j)}, \qquad i \in \{1,\ldots,N\}.$$` and then set `$$\eta_p^N = \frac{1}{N} \sum_{i=1}^N \delta_{\zeta_p^i}, \qquad Z_p^N = Z_{p-1}^N \eta_p^N(G_p), \qquad \hat{\eta}^N_p = \frac{\eta_p^N \cdot G_p}{\eta_p^N(G_p)}.$$` --- # Evolutionary algorithm interpretation Sampling `$$\zeta_p^i \overset{\rm iid}{\sim} \hat{\eta}^N_{p-1}M_p = \frac{\sum_{j=1}^N G_{p-1}(\zeta_{p-1}^j)M_p(\zeta_{p-1}^j, \cdot)}{\sum_{j=1}^N G_{p-1}(\zeta_{p-1}^j)}, \qquad i \in \{1,\ldots,N\},$$` can be viewed as a two-stage procedure. -- For each `\(i \in \{1,\ldots,N\}\)`, 1. Sample `\(A_{p-1}^i \sim {\rm Categorical}(G_{p-1}(\zeta_{p-1}^1),\ldots,G_{p-1}(\zeta_{p-1}^N))\)`. 2. Sample `\(\zeta_p^i \sim M_p(\zeta_{p-1}^{A_{p-1}^i}, \cdot)\)`. --- 1. Selection: `\(G_{p-1}\)` is like a fitness function. 2. Mutation: `\(M_p\)` is a mutation kernel. --- # R code (univariate states) ```r smc <- function(mu, M, G, n, N) { zetas <- matrix(0,n,N) as <- matrix(0,n-1,N) gs <- matrix(0,n,N) log.Zs <- rep(0,n) zetas[1,] <- mu(N) gs[1,] <- G(1, zetas[1,]) log.Zs[1] <- log(mean(gs[1,])) for (p in 2:n) { # simulate ancestor indices, then particles as[p-1,] <- sample(N,N,replace=TRUE,prob=gs[p-1,]/sum(gs[p-1,])) zetas[p,] <- M(p, zetas[p-1,as[p-1,]]) gs[p,] <- G(p, zetas[p,]) log.Zs[p] <- log.Zs[p-1] + log(mean(gs[p,])) } return(list(zetas=zetas,gs=gs,as=as,log.Zs=log.Zs)) } ``` --- # Evolution of the particle system <div id="htmlwidget-c85e503dc4cdec54bec5" style="width:864px;height:504px;" class="plotly html-widget"></div> <script type="application/json" data-for="htmlwidget-c85e503dc4cdec54bec5">{"x":{"data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583],"text":["time: 1<br />x: 0.58552882<br />frame: 1","time: 1<br />x: 0.70946602<br />frame: 1","time: 1<br />x: -0.10930331<br />frame: 1","time: 1<br />x: -0.45349717<br />frame: 1","time: 1<br />x: 0.60588746<br />frame: 1","time: 1<br />x: -1.81795597<br />frame: 1","time: 1<br />x: 0.63009855<br />frame: 1","time: 1<br />x: -0.27618411<br />frame: 1","time: 1<br />x: -0.28415974<br />frame: 1","time: 1<br />x: -0.91932200<br />frame: 1","time: 1<br />x: -0.11624781<br />frame: 1","time: 1<br />x: 1.81731204<br />frame: 1","time: 1<br />x: 0.37062786<br />frame: 1","time: 1<br />x: 0.52021646<br />frame: 1","time: 1<br />x: -0.75053199<br />frame: 1","time: 1<br />x: 0.81689984<br />frame: 1"],"frame":"1","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 1<br />colour: ancestral line"],"frame":"1","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"layout":{"margin":{"t":28.5244618395303,"r":7.30593607305936,"b":42.4787997390737,"l":37.2602739726027},"plot_bgcolor":"rgba(235,235,235,1)","paper_bgcolor":"rgba(255,255,255,1)","font":{"color":"rgba(0,0,0,1)","family":"","size":14.6118721461187},"xaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[0.55,10.45],"tickmode":"array","ticktext":["2.5","5.0","7.5","10.0"],"tickvals":[2.5,5,7.5,10],"categoryorder":"array","categoryarray":["2.5","5.0","7.5","10.0"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.65296803652968,"tickwidth":0.66417600664176,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.689497716895},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)","gridwidth":0.66417600664176,"zeroline":false,"anchor":"y","title":{"text":"time","font":{"color":"rgba(0,0,0,1)","family":"","size":14.6118721461187}},"hoverformat":".2f"},"yaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[-4.01045945369587,4.17558865240402],"tickmode":"array","ticktext":["-4","-2","0","2","4"],"tickvals":[-4,-2,0,2,4],"categoryorder":"array","categoryarray":["-4","-2","0","2","4"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.65296803652968,"tickwidth":0.66417600664176,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.689497716895},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)","gridwidth":0.66417600664176,"zeroline":false,"anchor":"x","title":{"text":"x","font":{"color":"rgba(0,0,0,1)","family":"","size":14.6118721461187}},"hoverformat":".2f"},"shapes":[{"type":"rect","fillcolor":null,"line":{"color":null,"width":0,"linetype":[]},"yref":"paper","xref":"paper","x0":0,"x1":1,"y0":0,"y1":1}],"showlegend":true,"legend":{"bgcolor":"rgba(255,255,255,1)","bordercolor":"transparent","borderwidth":1.88976377952756,"font":{"color":"rgba(0,0,0,1)","family":"","size":11.689497716895},"y":0.938132733408324},"annotations":[{"text":"colour","x":1.02,"y":1,"showarrow":false,"ax":0,"ay":0,"font":{"color":"rgba(0,0,0,1)","family":"","size":14.6118721461187},"xref":"paper","yref":"paper","textangle":-0,"xanchor":"left","yanchor":"bottom","legendTitle":true}],"hovermode":"closest","barmode":"relative","sliders":[{"currentvalue":{"prefix":"~frame: ","xanchor":"right","font":{"size":16,"color":"rgba(204,204,204,1)"}},"steps":[{"method":"animate","args":[["1"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"1","value":"1"},{"method":"animate","args":[["2"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"2","value":"2"},{"method":"animate","args":[["3"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"3","value":"3"},{"method":"animate","args":[["4"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"4","value":"4"},{"method":"animate","args":[["5"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"5","value":"5"},{"method":"animate","args":[["6"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"6","value":"6"},{"method":"animate","args":[["7"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"7","value":"7"},{"method":"animate","args":[["8"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"8","value":"8"},{"method":"animate","args":[["9"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"9","value":"9"},{"method":"animate","args":[["10"],{"transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true},"mode":"immediate"}],"label":"10","value":"10"}],"visible":true,"pad":{"t":40}}],"updatemenus":[{"type":"buttons","direction":"right","showactive":false,"y":0,"x":0,"yanchor":"top","xanchor":"right","pad":{"t":60,"r":5},"buttons":[{"label":"Play","method":"animate","args":[null,{"fromcurrent":true,"mode":"immediate","transition":{"duration":0,"easing":"linear"},"frame":{"duration":1000,"redraw":true}}]}]}]},"config":{"doubleClick":"reset","showSendToCloud":false},"source":"A","attrs":{"5342128e8fb3":{"x":{},"y":{},"frame":{},"type":"scatter"},"53424d7c3a49":{"x":{},"y":{},"xend":{},"yend":{},"frame":{},"colour":{}}},"cur_data":"5342128e8fb3","visdat":{"5342128e8fb3":["function (y) ","x"],"53424d7c3a49":["function (y) ","x"]},"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.2,"selected":{"opacity":1},"debounce":0},"frames":[{"name":"1","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583],"text":["time: 1<br />x: 0.58552882<br />frame: 1","time: 1<br />x: 0.70946602<br />frame: 1","time: 1<br />x: -0.10930331<br />frame: 1","time: 1<br />x: -0.45349717<br />frame: 1","time: 1<br />x: 0.60588746<br />frame: 1","time: 1<br />x: -1.81795597<br />frame: 1","time: 1<br />x: 0.63009855<br />frame: 1","time: 1<br />x: -0.27618411<br />frame: 1","time: 1<br />x: -0.28415974<br />frame: 1","time: 1<br />x: -0.91932200<br />frame: 1","time: 1<br />x: -0.11624781<br />frame: 1","time: 1<br />x: 1.81731204<br />frame: 1","time: 1<br />x: 0.37062786<br />frame: 1","time: 1<br />x: 0.52021646<br />frame: 1","time: 1<br />x: -0.75053199<br />frame: 1","time: 1<br />x: 0.81689984<br />frame: 1"],"frame":"1","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 1<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 1<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 1<br />colour: ancestral line"],"frame":"1","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]},{"name":"2","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583,-1.87389362192153,2.43519606987921,-0.111019499436683,0.991007665556376,1.42902333217143,0.654588862602458,1.41776063439425,2.08058573999553,2.75865635491572,1.1789484660177,-0.665050809660074,1.20065429678208,-0.608246322680488,-1.29142237960068,1.65843053619191,-0.0835022660332458],"text":["time: 1<br />x: 0.58552882<br />frame: 2","time: 1<br />x: 0.70946602<br />frame: 2","time: 1<br />x: -0.10930331<br />frame: 2","time: 1<br />x: -0.45349717<br />frame: 2","time: 1<br />x: 0.60588746<br />frame: 2","time: 1<br />x: -1.81795597<br />frame: 2","time: 1<br />x: 0.63009855<br />frame: 2","time: 1<br />x: -0.27618411<br />frame: 2","time: 1<br />x: -0.28415974<br />frame: 2","time: 1<br />x: -0.91932200<br />frame: 2","time: 1<br />x: -0.11624781<br />frame: 2","time: 1<br />x: 1.81731204<br />frame: 2","time: 1<br />x: 0.37062786<br />frame: 2","time: 1<br />x: 0.52021646<br />frame: 2","time: 1<br />x: -0.75053199<br />frame: 2","time: 1<br />x: 0.81689984<br />frame: 2","time: 2<br />x: -1.87389362<br />frame: 2","time: 2<br />x: 2.43519607<br />frame: 2","time: 2<br />x: -0.11101950<br />frame: 2","time: 2<br />x: 0.99100767<br />frame: 2","time: 2<br />x: 1.42902333<br />frame: 2","time: 2<br />x: 0.65458886<br />frame: 2","time: 2<br />x: 1.41776063<br />frame: 2","time: 2<br />x: 2.08058574<br />frame: 2","time: 2<br />x: 2.75865635<br />frame: 2","time: 2<br />x: 1.17894847<br />frame: 2","time: 2<br />x: -0.66505081<br />frame: 2","time: 2<br />x: 1.20065430<br />frame: 2","time: 2<br />x: -0.60824632<br />frame: 2","time: 2<br />x: -1.29142238<br />frame: 2","time: 2<br />x: 1.65843054<br />frame: 2","time: 2<br />x: -0.08350227<br />frame: 2"],"frame":"2","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583,null,-0.276184105225216,-1.87389362192153,null,0.630098551068391,2.43519606987921,null,0.370627864257954,-0.111019499436683,null,0.370627864257954,0.991007665556376,null,0.816899839520583,1.42902333217143,null,0.816899839520583,0.654588862602458,null,0.605887455840394,1.41776063439425,null,-0.116247806352002,2.08058573999553,null,0.709466017509524,2.75865635491572,null,-0.453497173462763,1.1789484660177,null,-0.919322002474128,-0.665050809660074,null,0.709466017509524,1.20065429678208,null,-0.284159743943371,-0.608246322680488,null,0.370627864257954,-1.29142237960068,null,-0.109303314681054,1.65843053619191,null,-0.109303314681054,-0.0835022660332458],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 2<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 2<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 2<br />colour: ancestral line"],"frame":"2","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]},{"name":"3","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583,-1.87389362192153,2.43519606987921,-0.111019499436683,0.991007665556376,1.42902333217143,0.654588862602458,1.41776063439425,2.08058573999553,2.75865635491572,1.1789484660177,-0.665050809660074,1.20065429678208,-0.608246322680488,-1.29142237960068,1.65843053619191,-0.0835022660332458,2.00094828782994,-1.9718496431245,-0.62388833989535,1.86419039869879,-0.0574292290792283,-0.256583482125251,-1.96239891859462,-0.38709711485393,-0.600251106725797,0.74029306267284,3.8034955566722,-3.63836635796405,0.0660897145819629,-0.351523816173168,1.75395737228598,0.924912033035336],"text":["time: 1<br />x: 0.58552882<br />frame: 3","time: 1<br />x: 0.70946602<br />frame: 3","time: 1<br />x: -0.10930331<br />frame: 3","time: 1<br />x: -0.45349717<br />frame: 3","time: 1<br />x: 0.60588746<br />frame: 3","time: 1<br />x: -1.81795597<br />frame: 3","time: 1<br />x: 0.63009855<br />frame: 3","time: 1<br />x: -0.27618411<br />frame: 3","time: 1<br />x: -0.28415974<br />frame: 3","time: 1<br />x: -0.91932200<br />frame: 3","time: 1<br />x: -0.11624781<br />frame: 3","time: 1<br />x: 1.81731204<br />frame: 3","time: 1<br />x: 0.37062786<br />frame: 3","time: 1<br />x: 0.52021646<br />frame: 3","time: 1<br />x: -0.75053199<br />frame: 3","time: 1<br />x: 0.81689984<br />frame: 3","time: 2<br />x: -1.87389362<br />frame: 3","time: 2<br />x: 2.43519607<br />frame: 3","time: 2<br />x: -0.11101950<br />frame: 3","time: 2<br />x: 0.99100767<br />frame: 3","time: 2<br />x: 1.42902333<br />frame: 3","time: 2<br />x: 0.65458886<br />frame: 3","time: 2<br />x: 1.41776063<br />frame: 3","time: 2<br />x: 2.08058574<br />frame: 3","time: 2<br />x: 2.75865635<br />frame: 3","time: 2<br />x: 1.17894847<br />frame: 3","time: 2<br />x: -0.66505081<br />frame: 3","time: 2<br />x: 1.20065430<br />frame: 3","time: 2<br />x: -0.60824632<br />frame: 3","time: 2<br />x: -1.29142238<br />frame: 3","time: 2<br />x: 1.65843054<br />frame: 3","time: 2<br />x: -0.08350227<br />frame: 3","time: 3<br />x: 2.00094829<br />frame: 3","time: 3<br />x: -1.97184964<br />frame: 3","time: 3<br />x: -0.62388834<br />frame: 3","time: 3<br />x: 1.86419040<br />frame: 3","time: 3<br />x: -0.05742923<br />frame: 3","time: 3<br />x: -0.25658348<br />frame: 3","time: 3<br />x: -1.96239892<br />frame: 3","time: 3<br />x: -0.38709711<br />frame: 3","time: 3<br />x: -0.60025111<br />frame: 3","time: 3<br />x: 0.74029306<br />frame: 3","time: 3<br />x: 3.80349556<br />frame: 3","time: 3<br />x: -3.63836636<br />frame: 3","time: 3<br />x: 0.06608971<br />frame: 3","time: 3<br />x: -0.35152382<br />frame: 3","time: 3<br />x: 1.75395737<br />frame: 3","time: 3<br />x: 0.92491203<br />frame: 3"],"frame":"3","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583,null,-0.276184105225216,-1.87389362192153,null,0.630098551068391,2.43519606987921,null,0.370627864257954,-0.111019499436683,null,0.370627864257954,0.991007665556376,null,0.816899839520583,1.42902333217143,null,0.816899839520583,0.654588862602458,null,0.605887455840394,1.41776063439425,null,-0.116247806352002,2.08058573999553,null,0.709466017509524,2.75865635491572,null,-0.453497173462763,1.1789484660177,null,-0.919322002474128,-0.665050809660074,null,0.709466017509524,1.20065429678208,null,-0.284159743943371,-0.608246322680488,null,0.370627864257954,-1.29142237960068,null,-0.109303314681054,1.65843053619191,null,-0.109303314681054,-0.0835022660332458,null,1.41776063439425,2.00094828782994,null,-0.665050809660074,-1.9718496431245,null,-0.0835022660332458,-0.62388833989535,null,-0.0835022660332458,1.86419039869879,null,-0.111019499436683,-0.0574292290792283,null,-0.608246322680488,-0.256583482125251,null,-1.29142237960068,-1.96239891859462,null,-0.665050809660074,-0.38709711485393,null,-1.29142237960068,-0.600251106725797,null,-0.0835022660332458,0.74029306267284,null,1.65843053619191,3.8034955566722,null,-1.29142237960068,-3.63836635796405,null,-0.0835022660332458,0.0660897145819629,null,0.991007665556376,-0.351523816173168,null,1.20065429678208,1.75395737228598,null,-0.665050809660074,0.924912033035336],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 3<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 3<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 3<br />colour: ancestral line"],"frame":"3","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]},{"name":"4","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583,-1.87389362192153,2.43519606987921,-0.111019499436683,0.991007665556376,1.42902333217143,0.654588862602458,1.41776063439425,2.08058573999553,2.75865635491572,1.1789484660177,-0.665050809660074,1.20065429678208,-0.608246322680488,-1.29142237960068,1.65843053619191,-0.0835022660332458,2.00094828782994,-1.9718496431245,-0.62388833989535,1.86419039869879,-0.0574292290792283,-0.256583482125251,-1.96239891859462,-0.38709711485393,-0.600251106725797,0.74029306267284,3.8034955566722,-3.63836635796405,0.0660897145819629,-0.351523816173168,1.75395737228598,0.924912033035336,-1.30593630108152,3.07080502507755,1.3452761537882,0.318712510796175,1.56655134982527,-1.43542883008643,2.34043867945245,1.94617044051643,0.0214947343107265,1.1092332661334,-0.36179834177289,3.21740397963581,0.584123558183514,1.24321084467137,0.738132183596749,-0.908204487563135],"text":["time: 1<br />x: 0.58552882<br />frame: 4","time: 1<br />x: 0.70946602<br />frame: 4","time: 1<br />x: -0.10930331<br />frame: 4","time: 1<br />x: -0.45349717<br />frame: 4","time: 1<br />x: 0.60588746<br />frame: 4","time: 1<br />x: -1.81795597<br />frame: 4","time: 1<br />x: 0.63009855<br />frame: 4","time: 1<br />x: -0.27618411<br />frame: 4","time: 1<br />x: -0.28415974<br />frame: 4","time: 1<br />x: -0.91932200<br />frame: 4","time: 1<br />x: -0.11624781<br />frame: 4","time: 1<br />x: 1.81731204<br />frame: 4","time: 1<br />x: 0.37062786<br />frame: 4","time: 1<br />x: 0.52021646<br />frame: 4","time: 1<br />x: -0.75053199<br />frame: 4","time: 1<br />x: 0.81689984<br />frame: 4","time: 2<br />x: -1.87389362<br />frame: 4","time: 2<br />x: 2.43519607<br />frame: 4","time: 2<br />x: -0.11101950<br />frame: 4","time: 2<br />x: 0.99100767<br />frame: 4","time: 2<br />x: 1.42902333<br />frame: 4","time: 2<br />x: 0.65458886<br />frame: 4","time: 2<br />x: 1.41776063<br />frame: 4","time: 2<br />x: 2.08058574<br />frame: 4","time: 2<br />x: 2.75865635<br />frame: 4","time: 2<br />x: 1.17894847<br />frame: 4","time: 2<br />x: -0.66505081<br />frame: 4","time: 2<br />x: 1.20065430<br />frame: 4","time: 2<br />x: -0.60824632<br />frame: 4","time: 2<br />x: -1.29142238<br />frame: 4","time: 2<br />x: 1.65843054<br />frame: 4","time: 2<br />x: -0.08350227<br />frame: 4","time: 3<br />x: 2.00094829<br />frame: 4","time: 3<br />x: -1.97184964<br />frame: 4","time: 3<br />x: -0.62388834<br />frame: 4","time: 3<br />x: 1.86419040<br />frame: 4","time: 3<br />x: -0.05742923<br />frame: 4","time: 3<br />x: -0.25658348<br />frame: 4","time: 3<br />x: -1.96239892<br />frame: 4","time: 3<br />x: -0.38709711<br />frame: 4","time: 3<br />x: -0.60025111<br />frame: 4","time: 3<br />x: 0.74029306<br />frame: 4","time: 3<br />x: 3.80349556<br />frame: 4","time: 3<br />x: -3.63836636<br />frame: 4","time: 3<br />x: 0.06608971<br />frame: 4","time: 3<br />x: -0.35152382<br />frame: 4","time: 3<br />x: 1.75395737<br />frame: 4","time: 3<br />x: 0.92491203<br />frame: 4","time: 4<br />x: -1.30593630<br />frame: 4","time: 4<br />x: 3.07080503<br />frame: 4","time: 4<br />x: 1.34527615<br />frame: 4","time: 4<br />x: 0.31871251<br />frame: 4","time: 4<br />x: 1.56655135<br />frame: 4","time: 4<br />x: -1.43542883<br />frame: 4","time: 4<br />x: 2.34043868<br />frame: 4","time: 4<br />x: 1.94617044<br />frame: 4","time: 4<br />x: 0.02149473<br />frame: 4","time: 4<br />x: 1.10923327<br />frame: 4","time: 4<br />x: -0.36179834<br />frame: 4","time: 4<br />x: 3.21740398<br />frame: 4","time: 4<br />x: 0.58412356<br />frame: 4","time: 4<br />x: 1.24321084<br />frame: 4","time: 4<br />x: 0.73813218<br />frame: 4","time: 4<br />x: -0.90820449<br />frame: 4"],"frame":"4","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583,null,-0.276184105225216,-1.87389362192153,null,0.630098551068391,2.43519606987921,null,0.370627864257954,-0.111019499436683,null,0.370627864257954,0.991007665556376,null,0.816899839520583,1.42902333217143,null,0.816899839520583,0.654588862602458,null,0.605887455840394,1.41776063439425,null,-0.116247806352002,2.08058573999553,null,0.709466017509524,2.75865635491572,null,-0.453497173462763,1.1789484660177,null,-0.919322002474128,-0.665050809660074,null,0.709466017509524,1.20065429678208,null,-0.284159743943371,-0.608246322680488,null,0.370627864257954,-1.29142237960068,null,-0.109303314681054,1.65843053619191,null,-0.109303314681054,-0.0835022660332458,null,1.41776063439425,2.00094828782994,null,-0.665050809660074,-1.9718496431245,null,-0.0835022660332458,-0.62388833989535,null,-0.0835022660332458,1.86419039869879,null,-0.111019499436683,-0.0574292290792283,null,-0.608246322680488,-0.256583482125251,null,-1.29142237960068,-1.96239891859462,null,-0.665050809660074,-0.38709711485393,null,-1.29142237960068,-0.600251106725797,null,-0.0835022660332458,0.74029306267284,null,1.65843053619191,3.8034955566722,null,-1.29142237960068,-3.63836635796405,null,-0.0835022660332458,0.0660897145819629,null,0.991007665556376,-0.351523816173168,null,1.20065429678208,1.75395737228598,null,-0.665050809660074,0.924912033035336,null,-0.256583482125251,-1.30593630108152,null,0.74029306267284,3.07080502507755,null,-0.0574292290792283,1.3452761537882,null,-0.62388833989535,0.318712510796175,null,0.74029306267284,1.56655134982527,null,-0.62388833989535,-1.43542883008643,null,1.86419039869879,2.34043867945245,null,0.924912033035336,1.94617044051643,null,-0.62388833989535,0.0214947343107265,null,0.0660897145819629,1.1092332661334,null,-0.0574292290792283,-0.36179834177289,null,0.74029306267284,3.21740397963581,null,-0.38709711485393,0.584123558183514,null,-0.62388833989535,1.24321084467137,null,0.0660897145819629,0.738132183596749,null,-0.600251106725797,-0.908204487563135],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 4<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 4<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 4<br />colour: ancestral line"],"frame":"4","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]},{"name":"5","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583,-1.87389362192153,2.43519606987921,-0.111019499436683,0.991007665556376,1.42902333217143,0.654588862602458,1.41776063439425,2.08058573999553,2.75865635491572,1.1789484660177,-0.665050809660074,1.20065429678208,-0.608246322680488,-1.29142237960068,1.65843053619191,-0.0835022660332458,2.00094828782994,-1.9718496431245,-0.62388833989535,1.86419039869879,-0.0574292290792283,-0.256583482125251,-1.96239891859462,-0.38709711485393,-0.600251106725797,0.74029306267284,3.8034955566722,-3.63836635796405,0.0660897145819629,-0.351523816173168,1.75395737228598,0.924912033035336,-1.30593630108152,3.07080502507755,1.3452761537882,0.318712510796175,1.56655134982527,-1.43542883008643,2.34043867945245,1.94617044051643,0.0214947343107265,1.1092332661334,-0.36179834177289,3.21740397963581,0.584123558183514,1.24321084467137,0.738132183596749,-0.908204487563135,0.604189748612507,0.722290986419984,-1.90573386490635,-2.00048299435227,0.542637918317463,-0.572099772057259,0.741131038583174,-1.68655359762182,1.70763566268555,-1.84198429957246,-0.673404426351037,3.12266099242653,-0.129320780498295,0.639836048290143,0.01303859779118,0.0212174616340499],"text":["time: 1<br />x: 0.58552882<br />frame: 5","time: 1<br />x: 0.70946602<br />frame: 5","time: 1<br />x: -0.10930331<br />frame: 5","time: 1<br />x: -0.45349717<br />frame: 5","time: 1<br />x: 0.60588746<br />frame: 5","time: 1<br />x: -1.81795597<br />frame: 5","time: 1<br />x: 0.63009855<br />frame: 5","time: 1<br />x: -0.27618411<br />frame: 5","time: 1<br />x: -0.28415974<br />frame: 5","time: 1<br />x: -0.91932200<br />frame: 5","time: 1<br />x: -0.11624781<br />frame: 5","time: 1<br />x: 1.81731204<br />frame: 5","time: 1<br />x: 0.37062786<br />frame: 5","time: 1<br />x: 0.52021646<br />frame: 5","time: 1<br />x: -0.75053199<br />frame: 5","time: 1<br />x: 0.81689984<br />frame: 5","time: 2<br />x: -1.87389362<br />frame: 5","time: 2<br />x: 2.43519607<br />frame: 5","time: 2<br />x: -0.11101950<br />frame: 5","time: 2<br />x: 0.99100767<br />frame: 5","time: 2<br />x: 1.42902333<br />frame: 5","time: 2<br />x: 0.65458886<br />frame: 5","time: 2<br />x: 1.41776063<br />frame: 5","time: 2<br />x: 2.08058574<br />frame: 5","time: 2<br />x: 2.75865635<br />frame: 5","time: 2<br />x: 1.17894847<br />frame: 5","time: 2<br />x: -0.66505081<br />frame: 5","time: 2<br />x: 1.20065430<br />frame: 5","time: 2<br />x: -0.60824632<br />frame: 5","time: 2<br />x: -1.29142238<br />frame: 5","time: 2<br />x: 1.65843054<br />frame: 5","time: 2<br />x: -0.08350227<br />frame: 5","time: 3<br />x: 2.00094829<br />frame: 5","time: 3<br />x: -1.97184964<br />frame: 5","time: 3<br />x: -0.62388834<br />frame: 5","time: 3<br />x: 1.86419040<br />frame: 5","time: 3<br />x: -0.05742923<br />frame: 5","time: 3<br />x: -0.25658348<br />frame: 5","time: 3<br />x: -1.96239892<br />frame: 5","time: 3<br />x: -0.38709711<br />frame: 5","time: 3<br />x: -0.60025111<br />frame: 5","time: 3<br />x: 0.74029306<br />frame: 5","time: 3<br />x: 3.80349556<br />frame: 5","time: 3<br />x: -3.63836636<br />frame: 5","time: 3<br />x: 0.06608971<br />frame: 5","time: 3<br />x: -0.35152382<br />frame: 5","time: 3<br />x: 1.75395737<br />frame: 5","time: 3<br />x: 0.92491203<br />frame: 5","time: 4<br />x: -1.30593630<br />frame: 5","time: 4<br />x: 3.07080503<br />frame: 5","time: 4<br />x: 1.34527615<br />frame: 5","time: 4<br />x: 0.31871251<br />frame: 5","time: 4<br />x: 1.56655135<br />frame: 5","time: 4<br />x: -1.43542883<br />frame: 5","time: 4<br />x: 2.34043868<br />frame: 5","time: 4<br />x: 1.94617044<br />frame: 5","time: 4<br />x: 0.02149473<br />frame: 5","time: 4<br />x: 1.10923327<br />frame: 5","time: 4<br />x: -0.36179834<br />frame: 5","time: 4<br />x: 3.21740398<br />frame: 5","time: 4<br />x: 0.58412356<br />frame: 5","time: 4<br />x: 1.24321084<br />frame: 5","time: 4<br />x: 0.73813218<br />frame: 5","time: 4<br />x: -0.90820449<br />frame: 5","time: 5<br />x: 0.60418975<br />frame: 5","time: 5<br />x: 0.72229099<br />frame: 5","time: 5<br />x: -1.90573386<br />frame: 5","time: 5<br />x: -2.00048299<br />frame: 5","time: 5<br />x: 0.54263792<br />frame: 5","time: 5<br />x: -0.57209977<br />frame: 5","time: 5<br />x: 0.74113104<br />frame: 5","time: 5<br />x: -1.68655360<br />frame: 5","time: 5<br />x: 1.70763566<br />frame: 5","time: 5<br />x: -1.84198430<br />frame: 5","time: 5<br />x: -0.67340443<br />frame: 5","time: 5<br />x: 3.12266099<br />frame: 5","time: 5<br />x: -0.12932078<br />frame: 5","time: 5<br />x: 0.63983605<br />frame: 5","time: 5<br />x: 0.01303860<br />frame: 5","time: 5<br />x: 0.02121746<br />frame: 5"],"frame":"5","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583,null,-0.276184105225216,-1.87389362192153,null,0.630098551068391,2.43519606987921,null,0.370627864257954,-0.111019499436683,null,0.370627864257954,0.991007665556376,null,0.816899839520583,1.42902333217143,null,0.816899839520583,0.654588862602458,null,0.605887455840394,1.41776063439425,null,-0.116247806352002,2.08058573999553,null,0.709466017509524,2.75865635491572,null,-0.453497173462763,1.1789484660177,null,-0.919322002474128,-0.665050809660074,null,0.709466017509524,1.20065429678208,null,-0.284159743943371,-0.608246322680488,null,0.370627864257954,-1.29142237960068,null,-0.109303314681054,1.65843053619191,null,-0.109303314681054,-0.0835022660332458,null,1.41776063439425,2.00094828782994,null,-0.665050809660074,-1.9718496431245,null,-0.0835022660332458,-0.62388833989535,null,-0.0835022660332458,1.86419039869879,null,-0.111019499436683,-0.0574292290792283,null,-0.608246322680488,-0.256583482125251,null,-1.29142237960068,-1.96239891859462,null,-0.665050809660074,-0.38709711485393,null,-1.29142237960068,-0.600251106725797,null,-0.0835022660332458,0.74029306267284,null,1.65843053619191,3.8034955566722,null,-1.29142237960068,-3.63836635796405,null,-0.0835022660332458,0.0660897145819629,null,0.991007665556376,-0.351523816173168,null,1.20065429678208,1.75395737228598,null,-0.665050809660074,0.924912033035336,null,-0.256583482125251,-1.30593630108152,null,0.74029306267284,3.07080502507755,null,-0.0574292290792283,1.3452761537882,null,-0.62388833989535,0.318712510796175,null,0.74029306267284,1.56655134982527,null,-0.62388833989535,-1.43542883008643,null,1.86419039869879,2.34043867945245,null,0.924912033035336,1.94617044051643,null,-0.62388833989535,0.0214947343107265,null,0.0660897145819629,1.1092332661334,null,-0.0574292290792283,-0.36179834177289,null,0.74029306267284,3.21740397963581,null,-0.38709711485393,0.584123558183514,null,-0.62388833989535,1.24321084467137,null,0.0660897145819629,0.738132183596749,null,-0.600251106725797,-0.908204487563135,null,1.1092332661334,0.604189748612507,null,-1.43542883008643,0.722290986419984,null,-1.30593630108152,-1.90573386490635,null,-1.30593630108152,-2.00048299435227,null,0.318712510796175,0.542637918317463,null,0.584123558183514,-0.572099772057259,null,0.318712510796175,0.741131038583174,null,-0.36179834177289,-1.68655359762182,null,1.56655134982527,1.70763566268555,null,-1.30593630108152,-1.84198429957246,null,-0.36179834177289,-0.673404426351037,null,1.56655134982527,3.12266099242653,null,0.318712510796175,-0.129320780498295,null,0.318712510796175,0.639836048290143,null,1.24321084467137,0.01303859779118,null,1.3452761537882,0.0212174616340499],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 5<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 5<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 5<br />colour: ancestral line"],"frame":"5","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]},{"name":"6","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583,-1.87389362192153,2.43519606987921,-0.111019499436683,0.991007665556376,1.42902333217143,0.654588862602458,1.41776063439425,2.08058573999553,2.75865635491572,1.1789484660177,-0.665050809660074,1.20065429678208,-0.608246322680488,-1.29142237960068,1.65843053619191,-0.0835022660332458,2.00094828782994,-1.9718496431245,-0.62388833989535,1.86419039869879,-0.0574292290792283,-0.256583482125251,-1.96239891859462,-0.38709711485393,-0.600251106725797,0.74029306267284,3.8034955566722,-3.63836635796405,0.0660897145819629,-0.351523816173168,1.75395737228598,0.924912033035336,-1.30593630108152,3.07080502507755,1.3452761537882,0.318712510796175,1.56655134982527,-1.43542883008643,2.34043867945245,1.94617044051643,0.0214947343107265,1.1092332661334,-0.36179834177289,3.21740397963581,0.584123558183514,1.24321084467137,0.738132183596749,-0.908204487563135,0.604189748612507,0.722290986419984,-1.90573386490635,-2.00048299435227,0.542637918317463,-0.572099772057259,0.741131038583174,-1.68655359762182,1.70763566268555,-1.84198429957246,-0.673404426351037,3.12266099242653,-0.129320780498295,0.639836048290143,0.01303859779118,0.0212174616340499,2.28599464062077,0.648047590811593,-1.36440202225901,0.958855347677899,-0.993339465395833,-0.518984974527995,-1.97468906991976,1.76151503566965,-2.69564965307202,-1.86943594446187,0.968787564416303,1.48784458177452,-1.47560710331635,-2.87121266325259,2.08368850008554,-0.505275791900848],"text":["time: 1<br />x: 0.58552882<br />frame: 6","time: 1<br />x: 0.70946602<br />frame: 6","time: 1<br />x: -0.10930331<br />frame: 6","time: 1<br />x: -0.45349717<br />frame: 6","time: 1<br />x: 0.60588746<br />frame: 6","time: 1<br />x: -1.81795597<br />frame: 6","time: 1<br />x: 0.63009855<br />frame: 6","time: 1<br />x: -0.27618411<br />frame: 6","time: 1<br />x: -0.28415974<br />frame: 6","time: 1<br />x: -0.91932200<br />frame: 6","time: 1<br />x: -0.11624781<br />frame: 6","time: 1<br />x: 1.81731204<br />frame: 6","time: 1<br />x: 0.37062786<br />frame: 6","time: 1<br />x: 0.52021646<br />frame: 6","time: 1<br />x: -0.75053199<br />frame: 6","time: 1<br />x: 0.81689984<br />frame: 6","time: 2<br />x: -1.87389362<br />frame: 6","time: 2<br />x: 2.43519607<br />frame: 6","time: 2<br />x: -0.11101950<br />frame: 6","time: 2<br />x: 0.99100767<br />frame: 6","time: 2<br />x: 1.42902333<br />frame: 6","time: 2<br />x: 0.65458886<br />frame: 6","time: 2<br />x: 1.41776063<br />frame: 6","time: 2<br />x: 2.08058574<br />frame: 6","time: 2<br />x: 2.75865635<br />frame: 6","time: 2<br />x: 1.17894847<br />frame: 6","time: 2<br />x: -0.66505081<br />frame: 6","time: 2<br />x: 1.20065430<br />frame: 6","time: 2<br />x: -0.60824632<br />frame: 6","time: 2<br />x: -1.29142238<br />frame: 6","time: 2<br />x: 1.65843054<br />frame: 6","time: 2<br />x: -0.08350227<br />frame: 6","time: 3<br />x: 2.00094829<br />frame: 6","time: 3<br />x: -1.97184964<br />frame: 6","time: 3<br />x: -0.62388834<br />frame: 6","time: 3<br />x: 1.86419040<br />frame: 6","time: 3<br />x: -0.05742923<br />frame: 6","time: 3<br />x: -0.25658348<br />frame: 6","time: 3<br />x: -1.96239892<br />frame: 6","time: 3<br />x: -0.38709711<br />frame: 6","time: 3<br />x: -0.60025111<br />frame: 6","time: 3<br />x: 0.74029306<br />frame: 6","time: 3<br />x: 3.80349556<br />frame: 6","time: 3<br />x: -3.63836636<br />frame: 6","time: 3<br />x: 0.06608971<br />frame: 6","time: 3<br />x: -0.35152382<br />frame: 6","time: 3<br />x: 1.75395737<br />frame: 6","time: 3<br />x: 0.92491203<br />frame: 6","time: 4<br />x: -1.30593630<br />frame: 6","time: 4<br />x: 3.07080503<br />frame: 6","time: 4<br />x: 1.34527615<br />frame: 6","time: 4<br />x: 0.31871251<br />frame: 6","time: 4<br />x: 1.56655135<br />frame: 6","time: 4<br />x: -1.43542883<br />frame: 6","time: 4<br />x: 2.34043868<br />frame: 6","time: 4<br />x: 1.94617044<br />frame: 6","time: 4<br />x: 0.02149473<br />frame: 6","time: 4<br />x: 1.10923327<br />frame: 6","time: 4<br />x: -0.36179834<br />frame: 6","time: 4<br />x: 3.21740398<br />frame: 6","time: 4<br />x: 0.58412356<br />frame: 6","time: 4<br />x: 1.24321084<br />frame: 6","time: 4<br />x: 0.73813218<br />frame: 6","time: 4<br />x: -0.90820449<br />frame: 6","time: 5<br />x: 0.60418975<br />frame: 6","time: 5<br />x: 0.72229099<br />frame: 6","time: 5<br />x: -1.90573386<br />frame: 6","time: 5<br />x: -2.00048299<br />frame: 6","time: 5<br />x: 0.54263792<br />frame: 6","time: 5<br />x: -0.57209977<br />frame: 6","time: 5<br />x: 0.74113104<br />frame: 6","time: 5<br />x: -1.68655360<br />frame: 6","time: 5<br />x: 1.70763566<br />frame: 6","time: 5<br />x: -1.84198430<br />frame: 6","time: 5<br />x: -0.67340443<br />frame: 6","time: 5<br />x: 3.12266099<br />frame: 6","time: 5<br />x: -0.12932078<br />frame: 6","time: 5<br />x: 0.63983605<br />frame: 6","time: 5<br />x: 0.01303860<br />frame: 6","time: 5<br />x: 0.02121746<br />frame: 6","time: 6<br />x: 2.28599464<br />frame: 6","time: 6<br />x: 0.64804759<br />frame: 6","time: 6<br />x: -1.36440202<br />frame: 6","time: 6<br />x: 0.95885535<br />frame: 6","time: 6<br />x: -0.99333947<br />frame: 6","time: 6<br />x: -0.51898497<br />frame: 6","time: 6<br />x: -1.97468907<br />frame: 6","time: 6<br />x: 1.76151504<br />frame: 6","time: 6<br />x: -2.69564965<br />frame: 6","time: 6<br />x: -1.86943594<br />frame: 6","time: 6<br />x: 0.96878756<br />frame: 6","time: 6<br />x: 1.48784458<br />frame: 6","time: 6<br />x: -1.47560710<br />frame: 6","time: 6<br />x: -2.87121266<br />frame: 6","time: 6<br />x: 2.08368850<br />frame: 6","time: 6<br />x: -0.50527579<br />frame: 6"],"frame":"6","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583,null,-0.276184105225216,-1.87389362192153,null,0.630098551068391,2.43519606987921,null,0.370627864257954,-0.111019499436683,null,0.370627864257954,0.991007665556376,null,0.816899839520583,1.42902333217143,null,0.816899839520583,0.654588862602458,null,0.605887455840394,1.41776063439425,null,-0.116247806352002,2.08058573999553,null,0.709466017509524,2.75865635491572,null,-0.453497173462763,1.1789484660177,null,-0.919322002474128,-0.665050809660074,null,0.709466017509524,1.20065429678208,null,-0.284159743943371,-0.608246322680488,null,0.370627864257954,-1.29142237960068,null,-0.109303314681054,1.65843053619191,null,-0.109303314681054,-0.0835022660332458,null,1.41776063439425,2.00094828782994,null,-0.665050809660074,-1.9718496431245,null,-0.0835022660332458,-0.62388833989535,null,-0.0835022660332458,1.86419039869879,null,-0.111019499436683,-0.0574292290792283,null,-0.608246322680488,-0.256583482125251,null,-1.29142237960068,-1.96239891859462,null,-0.665050809660074,-0.38709711485393,null,-1.29142237960068,-0.600251106725797,null,-0.0835022660332458,0.74029306267284,null,1.65843053619191,3.8034955566722,null,-1.29142237960068,-3.63836635796405,null,-0.0835022660332458,0.0660897145819629,null,0.991007665556376,-0.351523816173168,null,1.20065429678208,1.75395737228598,null,-0.665050809660074,0.924912033035336,null,-0.256583482125251,-1.30593630108152,null,0.74029306267284,3.07080502507755,null,-0.0574292290792283,1.3452761537882,null,-0.62388833989535,0.318712510796175,null,0.74029306267284,1.56655134982527,null,-0.62388833989535,-1.43542883008643,null,1.86419039869879,2.34043867945245,null,0.924912033035336,1.94617044051643,null,-0.62388833989535,0.0214947343107265,null,0.0660897145819629,1.1092332661334,null,-0.0574292290792283,-0.36179834177289,null,0.74029306267284,3.21740397963581,null,-0.38709711485393,0.584123558183514,null,-0.62388833989535,1.24321084467137,null,0.0660897145819629,0.738132183596749,null,-0.600251106725797,-0.908204487563135,null,1.1092332661334,0.604189748612507,null,-1.43542883008643,0.722290986419984,null,-1.30593630108152,-1.90573386490635,null,-1.30593630108152,-2.00048299435227,null,0.318712510796175,0.542637918317463,null,0.584123558183514,-0.572099772057259,null,0.318712510796175,0.741131038583174,null,-0.36179834177289,-1.68655359762182,null,1.56655134982527,1.70763566268555,null,-1.30593630108152,-1.84198429957246,null,-0.36179834177289,-0.673404426351037,null,1.56655134982527,3.12266099242653,null,0.318712510796175,-0.129320780498295,null,0.318712510796175,0.639836048290143,null,1.24321084467137,0.01303859779118,null,1.3452761537882,0.0212174616340499,null,0.741131038583174,2.28599464062077,null,-0.673404426351037,0.648047590811593,null,-1.68655359762182,-1.36440202225901,null,-0.572099772057259,0.958855347677899,null,-0.572099772057259,-0.993339465395833,null,0.639836048290143,-0.518984974527995,null,-0.129320780498295,-1.97468906991976,null,0.604189748612507,1.76151503566965,null,-0.572099772057259,-2.69564965307202,null,-0.673404426351037,-1.86943594446187,null,-0.673404426351037,0.968787564416303,null,0.604189748612507,1.48784458177452,null,-2.00048299435227,-1.47560710331635,null,-1.68655359762182,-2.87121266325259,null,-0.572099772057259,2.08368850008554,null,0.542637918317463,-0.505275791900848],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 6<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 6<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 6<br />colour: ancestral line"],"frame":"6","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]},{"name":"7","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583,-1.87389362192153,2.43519606987921,-0.111019499436683,0.991007665556376,1.42902333217143,0.654588862602458,1.41776063439425,2.08058573999553,2.75865635491572,1.1789484660177,-0.665050809660074,1.20065429678208,-0.608246322680488,-1.29142237960068,1.65843053619191,-0.0835022660332458,2.00094828782994,-1.9718496431245,-0.62388833989535,1.86419039869879,-0.0574292290792283,-0.256583482125251,-1.96239891859462,-0.38709711485393,-0.600251106725797,0.74029306267284,3.8034955566722,-3.63836635796405,0.0660897145819629,-0.351523816173168,1.75395737228598,0.924912033035336,-1.30593630108152,3.07080502507755,1.3452761537882,0.318712510796175,1.56655134982527,-1.43542883008643,2.34043867945245,1.94617044051643,0.0214947343107265,1.1092332661334,-0.36179834177289,3.21740397963581,0.584123558183514,1.24321084467137,0.738132183596749,-0.908204487563135,0.604189748612507,0.722290986419984,-1.90573386490635,-2.00048299435227,0.542637918317463,-0.572099772057259,0.741131038583174,-1.68655359762182,1.70763566268555,-1.84198429957246,-0.673404426351037,3.12266099242653,-0.129320780498295,0.639836048290143,0.01303859779118,0.0212174616340499,2.28599464062077,0.648047590811593,-1.36440202225901,0.958855347677899,-0.993339465395833,-0.518984974527995,-1.97468906991976,1.76151503566965,-2.69564965307202,-1.86943594446187,0.968787564416303,1.48784458177452,-1.47560710331635,-2.87121266325259,2.08368850008554,-0.505275791900848,-0.503129281462436,-2.1554800853798,-2.91169398930471,1.49770052074003,1.86480074874875,-0.366584795091119,-0.971280731554545,2.03624250478892,-0.309993638089596,-2.28210509807982,-0.613900027107975,-0.756222415753161,1.18036169400518,0.61455655141293,-0.437503730496629,-2.12617683017041],"text":["time: 1<br />x: 0.58552882<br />frame: 7","time: 1<br />x: 0.70946602<br />frame: 7","time: 1<br />x: -0.10930331<br />frame: 7","time: 1<br />x: -0.45349717<br />frame: 7","time: 1<br />x: 0.60588746<br />frame: 7","time: 1<br />x: -1.81795597<br />frame: 7","time: 1<br />x: 0.63009855<br />frame: 7","time: 1<br />x: -0.27618411<br />frame: 7","time: 1<br />x: -0.28415974<br />frame: 7","time: 1<br />x: -0.91932200<br />frame: 7","time: 1<br />x: -0.11624781<br />frame: 7","time: 1<br />x: 1.81731204<br />frame: 7","time: 1<br />x: 0.37062786<br />frame: 7","time: 1<br />x: 0.52021646<br />frame: 7","time: 1<br />x: -0.75053199<br />frame: 7","time: 1<br />x: 0.81689984<br />frame: 7","time: 2<br />x: -1.87389362<br />frame: 7","time: 2<br />x: 2.43519607<br />frame: 7","time: 2<br />x: -0.11101950<br />frame: 7","time: 2<br />x: 0.99100767<br />frame: 7","time: 2<br />x: 1.42902333<br />frame: 7","time: 2<br />x: 0.65458886<br />frame: 7","time: 2<br />x: 1.41776063<br />frame: 7","time: 2<br />x: 2.08058574<br />frame: 7","time: 2<br />x: 2.75865635<br />frame: 7","time: 2<br />x: 1.17894847<br />frame: 7","time: 2<br />x: -0.66505081<br />frame: 7","time: 2<br />x: 1.20065430<br />frame: 7","time: 2<br />x: -0.60824632<br />frame: 7","time: 2<br />x: -1.29142238<br />frame: 7","time: 2<br />x: 1.65843054<br />frame: 7","time: 2<br />x: -0.08350227<br />frame: 7","time: 3<br />x: 2.00094829<br />frame: 7","time: 3<br />x: -1.97184964<br />frame: 7","time: 3<br />x: -0.62388834<br />frame: 7","time: 3<br />x: 1.86419040<br />frame: 7","time: 3<br />x: -0.05742923<br />frame: 7","time: 3<br />x: -0.25658348<br />frame: 7","time: 3<br />x: -1.96239892<br />frame: 7","time: 3<br />x: -0.38709711<br />frame: 7","time: 3<br />x: -0.60025111<br />frame: 7","time: 3<br />x: 0.74029306<br />frame: 7","time: 3<br />x: 3.80349556<br />frame: 7","time: 3<br />x: -3.63836636<br />frame: 7","time: 3<br />x: 0.06608971<br />frame: 7","time: 3<br />x: -0.35152382<br />frame: 7","time: 3<br />x: 1.75395737<br />frame: 7","time: 3<br />x: 0.92491203<br />frame: 7","time: 4<br />x: -1.30593630<br />frame: 7","time: 4<br />x: 3.07080503<br />frame: 7","time: 4<br />x: 1.34527615<br />frame: 7","time: 4<br />x: 0.31871251<br />frame: 7","time: 4<br />x: 1.56655135<br />frame: 7","time: 4<br />x: -1.43542883<br />frame: 7","time: 4<br />x: 2.34043868<br />frame: 7","time: 4<br />x: 1.94617044<br />frame: 7","time: 4<br />x: 0.02149473<br />frame: 7","time: 4<br />x: 1.10923327<br />frame: 7","time: 4<br />x: -0.36179834<br />frame: 7","time: 4<br />x: 3.21740398<br />frame: 7","time: 4<br />x: 0.58412356<br />frame: 7","time: 4<br />x: 1.24321084<br />frame: 7","time: 4<br />x: 0.73813218<br />frame: 7","time: 4<br />x: -0.90820449<br />frame: 7","time: 5<br />x: 0.60418975<br />frame: 7","time: 5<br />x: 0.72229099<br />frame: 7","time: 5<br />x: -1.90573386<br />frame: 7","time: 5<br />x: -2.00048299<br />frame: 7","time: 5<br />x: 0.54263792<br />frame: 7","time: 5<br />x: -0.57209977<br />frame: 7","time: 5<br />x: 0.74113104<br />frame: 7","time: 5<br />x: -1.68655360<br />frame: 7","time: 5<br />x: 1.70763566<br />frame: 7","time: 5<br />x: -1.84198430<br />frame: 7","time: 5<br />x: -0.67340443<br />frame: 7","time: 5<br />x: 3.12266099<br />frame: 7","time: 5<br />x: -0.12932078<br />frame: 7","time: 5<br />x: 0.63983605<br />frame: 7","time: 5<br />x: 0.01303860<br />frame: 7","time: 5<br />x: 0.02121746<br />frame: 7","time: 6<br />x: 2.28599464<br />frame: 7","time: 6<br />x: 0.64804759<br />frame: 7","time: 6<br />x: -1.36440202<br />frame: 7","time: 6<br />x: 0.95885535<br />frame: 7","time: 6<br />x: -0.99333947<br />frame: 7","time: 6<br />x: -0.51898497<br />frame: 7","time: 6<br />x: -1.97468907<br />frame: 7","time: 6<br />x: 1.76151504<br />frame: 7","time: 6<br />x: -2.69564965<br />frame: 7","time: 6<br />x: -1.86943594<br />frame: 7","time: 6<br />x: 0.96878756<br />frame: 7","time: 6<br />x: 1.48784458<br />frame: 7","time: 6<br />x: -1.47560710<br />frame: 7","time: 6<br />x: -2.87121266<br />frame: 7","time: 6<br />x: 2.08368850<br />frame: 7","time: 6<br />x: -0.50527579<br />frame: 7","time: 7<br />x: -0.50312928<br />frame: 7","time: 7<br />x: -2.15548009<br />frame: 7","time: 7<br />x: -2.91169399<br />frame: 7","time: 7<br />x: 1.49770052<br />frame: 7","time: 7<br />x: 1.86480075<br />frame: 7","time: 7<br />x: -0.36658480<br />frame: 7","time: 7<br />x: -0.97128073<br />frame: 7","time: 7<br />x: 2.03624250<br />frame: 7","time: 7<br />x: -0.30999364<br />frame: 7","time: 7<br />x: -2.28210510<br />frame: 7","time: 7<br />x: -0.61390003<br />frame: 7","time: 7<br />x: -0.75622242<br />frame: 7","time: 7<br />x: 1.18036169<br />frame: 7","time: 7<br />x: 0.61455655<br />frame: 7","time: 7<br />x: -0.43750373<br />frame: 7","time: 7<br />x: -2.12617683<br />frame: 7"],"frame":"7","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583,null,-0.276184105225216,-1.87389362192153,null,0.630098551068391,2.43519606987921,null,0.370627864257954,-0.111019499436683,null,0.370627864257954,0.991007665556376,null,0.816899839520583,1.42902333217143,null,0.816899839520583,0.654588862602458,null,0.605887455840394,1.41776063439425,null,-0.116247806352002,2.08058573999553,null,0.709466017509524,2.75865635491572,null,-0.453497173462763,1.1789484660177,null,-0.919322002474128,-0.665050809660074,null,0.709466017509524,1.20065429678208,null,-0.284159743943371,-0.608246322680488,null,0.370627864257954,-1.29142237960068,null,-0.109303314681054,1.65843053619191,null,-0.109303314681054,-0.0835022660332458,null,1.41776063439425,2.00094828782994,null,-0.665050809660074,-1.9718496431245,null,-0.0835022660332458,-0.62388833989535,null,-0.0835022660332458,1.86419039869879,null,-0.111019499436683,-0.0574292290792283,null,-0.608246322680488,-0.256583482125251,null,-1.29142237960068,-1.96239891859462,null,-0.665050809660074,-0.38709711485393,null,-1.29142237960068,-0.600251106725797,null,-0.0835022660332458,0.74029306267284,null,1.65843053619191,3.8034955566722,null,-1.29142237960068,-3.63836635796405,null,-0.0835022660332458,0.0660897145819629,null,0.991007665556376,-0.351523816173168,null,1.20065429678208,1.75395737228598,null,-0.665050809660074,0.924912033035336,null,-0.256583482125251,-1.30593630108152,null,0.74029306267284,3.07080502507755,null,-0.0574292290792283,1.3452761537882,null,-0.62388833989535,0.318712510796175,null,0.74029306267284,1.56655134982527,null,-0.62388833989535,-1.43542883008643,null,1.86419039869879,2.34043867945245,null,0.924912033035336,1.94617044051643,null,-0.62388833989535,0.0214947343107265,null,0.0660897145819629,1.1092332661334,null,-0.0574292290792283,-0.36179834177289,null,0.74029306267284,3.21740397963581,null,-0.38709711485393,0.584123558183514,null,-0.62388833989535,1.24321084467137,null,0.0660897145819629,0.738132183596749,null,-0.600251106725797,-0.908204487563135,null,1.1092332661334,0.604189748612507,null,-1.43542883008643,0.722290986419984,null,-1.30593630108152,-1.90573386490635,null,-1.30593630108152,-2.00048299435227,null,0.318712510796175,0.542637918317463,null,0.584123558183514,-0.572099772057259,null,0.318712510796175,0.741131038583174,null,-0.36179834177289,-1.68655359762182,null,1.56655134982527,1.70763566268555,null,-1.30593630108152,-1.84198429957246,null,-0.36179834177289,-0.673404426351037,null,1.56655134982527,3.12266099242653,null,0.318712510796175,-0.129320780498295,null,0.318712510796175,0.639836048290143,null,1.24321084467137,0.01303859779118,null,1.3452761537882,0.0212174616340499,null,0.741131038583174,2.28599464062077,null,-0.673404426351037,0.648047590811593,null,-1.68655359762182,-1.36440202225901,null,-0.572099772057259,0.958855347677899,null,-0.572099772057259,-0.993339465395833,null,0.639836048290143,-0.518984974527995,null,-0.129320780498295,-1.97468906991976,null,0.604189748612507,1.76151503566965,null,-0.572099772057259,-2.69564965307202,null,-0.673404426351037,-1.86943594446187,null,-0.673404426351037,0.968787564416303,null,0.604189748612507,1.48784458177452,null,-2.00048299435227,-1.47560710331635,null,-1.68655359762182,-2.87121266325259,null,-0.572099772057259,2.08368850008554,null,0.542637918317463,-0.505275791900848,null,-0.518984974527995,-0.503129281462436,null,-2.69564965307202,-2.1554800853798,null,-1.36440202225901,-2.91169398930471,null,0.648047590811593,1.49770052074003,null,0.968787564416303,1.86480074874875,null,-0.505275791900848,-0.366584795091119,null,0.648047590811593,-0.971280731554545,null,1.48784458177452,2.03624250478892,null,-0.505275791900848,-0.309993638089596,null,-1.47560710331635,-2.28210509807982,null,-0.505275791900848,-0.613900027107975,null,-0.505275791900848,-0.756222415753161,null,-0.518984974527995,1.18036169400518,null,0.958855347677899,0.61455655141293,null,-0.505275791900848,-0.437503730496629,null,-1.47560710331635,-2.12617683017041],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: -0.50312928<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: -0.50312928<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -2.69564965<br />time: 7<br />loc: -2.15548009<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -2.69564965<br />time: 7<br />loc: -2.15548009<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.36440202<br />time: 7<br />loc: -2.91169399<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.36440202<br />time: 7<br />loc: -2.91169399<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: 1.49770052<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: 1.49770052<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.96878756<br />time: 7<br />loc: 1.86480075<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.96878756<br />time: 7<br />loc: 1.86480075<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.36658480<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.36658480<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: -0.97128073<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: -0.97128073<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 1.48784458<br />time: 7<br />loc: 2.03624250<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 1.48784458<br />time: 7<br />loc: 2.03624250<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.30999364<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.30999364<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.28210510<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.28210510<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.61390003<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.61390003<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.75622242<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.75622242<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: 1.18036169<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: 1.18036169<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.95885535<br />time: 7<br />loc: 0.61455655<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.95885535<br />time: 7<br />loc: 0.61455655<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.43750373<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.43750373<br />frame: 7<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.12617683<br />frame: 7<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.12617683<br />frame: 7<br />colour: ancestral line"],"frame":"7","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]},{"name":"8","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583,-1.87389362192153,2.43519606987921,-0.111019499436683,0.991007665556376,1.42902333217143,0.654588862602458,1.41776063439425,2.08058573999553,2.75865635491572,1.1789484660177,-0.665050809660074,1.20065429678208,-0.608246322680488,-1.29142237960068,1.65843053619191,-0.0835022660332458,2.00094828782994,-1.9718496431245,-0.62388833989535,1.86419039869879,-0.0574292290792283,-0.256583482125251,-1.96239891859462,-0.38709711485393,-0.600251106725797,0.74029306267284,3.8034955566722,-3.63836635796405,0.0660897145819629,-0.351523816173168,1.75395737228598,0.924912033035336,-1.30593630108152,3.07080502507755,1.3452761537882,0.318712510796175,1.56655134982527,-1.43542883008643,2.34043867945245,1.94617044051643,0.0214947343107265,1.1092332661334,-0.36179834177289,3.21740397963581,0.584123558183514,1.24321084467137,0.738132183596749,-0.908204487563135,0.604189748612507,0.722290986419984,-1.90573386490635,-2.00048299435227,0.542637918317463,-0.572099772057259,0.741131038583174,-1.68655359762182,1.70763566268555,-1.84198429957246,-0.673404426351037,3.12266099242653,-0.129320780498295,0.639836048290143,0.01303859779118,0.0212174616340499,2.28599464062077,0.648047590811593,-1.36440202225901,0.958855347677899,-0.993339465395833,-0.518984974527995,-1.97468906991976,1.76151503566965,-2.69564965307202,-1.86943594446187,0.968787564416303,1.48784458177452,-1.47560710331635,-2.87121266325259,2.08368850008554,-0.505275791900848,-0.503129281462436,-2.1554800853798,-2.91169398930471,1.49770052074003,1.86480074874875,-0.366584795091119,-0.971280731554545,2.03624250478892,-0.309993638089596,-2.28210509807982,-0.613900027107975,-0.756222415753161,1.18036169400518,0.61455655141293,-0.437503730496629,-2.12617683017041,1.32681033849769,-0.779902303906169,0.814477035240365,0.844063496177574,-0.467055793662738,2.86082363535566,-2.14017558210265,0.170956517178086,2.11696529423513,0.0347801924144859,-0.287952110715391,-0.635350658897616,-1.66796183998604,-0.805267106606783,-0.71538111477141,0.820388159757257],"text":["time: 1<br />x: 0.58552882<br />frame: 8","time: 1<br />x: 0.70946602<br />frame: 8","time: 1<br />x: -0.10930331<br />frame: 8","time: 1<br />x: -0.45349717<br />frame: 8","time: 1<br />x: 0.60588746<br />frame: 8","time: 1<br />x: -1.81795597<br />frame: 8","time: 1<br />x: 0.63009855<br />frame: 8","time: 1<br />x: -0.27618411<br />frame: 8","time: 1<br />x: -0.28415974<br />frame: 8","time: 1<br />x: -0.91932200<br />frame: 8","time: 1<br />x: -0.11624781<br />frame: 8","time: 1<br />x: 1.81731204<br />frame: 8","time: 1<br />x: 0.37062786<br />frame: 8","time: 1<br />x: 0.52021646<br />frame: 8","time: 1<br />x: -0.75053199<br />frame: 8","time: 1<br />x: 0.81689984<br />frame: 8","time: 2<br />x: -1.87389362<br />frame: 8","time: 2<br />x: 2.43519607<br />frame: 8","time: 2<br />x: -0.11101950<br />frame: 8","time: 2<br />x: 0.99100767<br />frame: 8","time: 2<br />x: 1.42902333<br />frame: 8","time: 2<br />x: 0.65458886<br />frame: 8","time: 2<br />x: 1.41776063<br />frame: 8","time: 2<br />x: 2.08058574<br />frame: 8","time: 2<br />x: 2.75865635<br />frame: 8","time: 2<br />x: 1.17894847<br />frame: 8","time: 2<br />x: -0.66505081<br />frame: 8","time: 2<br />x: 1.20065430<br />frame: 8","time: 2<br />x: -0.60824632<br />frame: 8","time: 2<br />x: -1.29142238<br />frame: 8","time: 2<br />x: 1.65843054<br />frame: 8","time: 2<br />x: -0.08350227<br />frame: 8","time: 3<br />x: 2.00094829<br />frame: 8","time: 3<br />x: -1.97184964<br />frame: 8","time: 3<br />x: -0.62388834<br />frame: 8","time: 3<br />x: 1.86419040<br />frame: 8","time: 3<br />x: -0.05742923<br />frame: 8","time: 3<br />x: -0.25658348<br />frame: 8","time: 3<br />x: -1.96239892<br />frame: 8","time: 3<br />x: -0.38709711<br />frame: 8","time: 3<br />x: -0.60025111<br />frame: 8","time: 3<br />x: 0.74029306<br />frame: 8","time: 3<br />x: 3.80349556<br />frame: 8","time: 3<br />x: -3.63836636<br />frame: 8","time: 3<br />x: 0.06608971<br />frame: 8","time: 3<br />x: -0.35152382<br />frame: 8","time: 3<br />x: 1.75395737<br />frame: 8","time: 3<br />x: 0.92491203<br />frame: 8","time: 4<br />x: -1.30593630<br />frame: 8","time: 4<br />x: 3.07080503<br />frame: 8","time: 4<br />x: 1.34527615<br />frame: 8","time: 4<br />x: 0.31871251<br />frame: 8","time: 4<br />x: 1.56655135<br />frame: 8","time: 4<br />x: -1.43542883<br />frame: 8","time: 4<br />x: 2.34043868<br />frame: 8","time: 4<br />x: 1.94617044<br />frame: 8","time: 4<br />x: 0.02149473<br />frame: 8","time: 4<br />x: 1.10923327<br />frame: 8","time: 4<br />x: -0.36179834<br />frame: 8","time: 4<br />x: 3.21740398<br />frame: 8","time: 4<br />x: 0.58412356<br />frame: 8","time: 4<br />x: 1.24321084<br />frame: 8","time: 4<br />x: 0.73813218<br />frame: 8","time: 4<br />x: -0.90820449<br />frame: 8","time: 5<br />x: 0.60418975<br />frame: 8","time: 5<br />x: 0.72229099<br />frame: 8","time: 5<br />x: -1.90573386<br />frame: 8","time: 5<br />x: -2.00048299<br />frame: 8","time: 5<br />x: 0.54263792<br />frame: 8","time: 5<br />x: -0.57209977<br />frame: 8","time: 5<br />x: 0.74113104<br />frame: 8","time: 5<br />x: -1.68655360<br />frame: 8","time: 5<br />x: 1.70763566<br />frame: 8","time: 5<br />x: -1.84198430<br />frame: 8","time: 5<br />x: -0.67340443<br />frame: 8","time: 5<br />x: 3.12266099<br />frame: 8","time: 5<br />x: -0.12932078<br />frame: 8","time: 5<br />x: 0.63983605<br />frame: 8","time: 5<br />x: 0.01303860<br />frame: 8","time: 5<br />x: 0.02121746<br />frame: 8","time: 6<br />x: 2.28599464<br />frame: 8","time: 6<br />x: 0.64804759<br />frame: 8","time: 6<br />x: -1.36440202<br />frame: 8","time: 6<br />x: 0.95885535<br />frame: 8","time: 6<br />x: -0.99333947<br />frame: 8","time: 6<br />x: -0.51898497<br />frame: 8","time: 6<br />x: -1.97468907<br />frame: 8","time: 6<br />x: 1.76151504<br />frame: 8","time: 6<br />x: -2.69564965<br />frame: 8","time: 6<br />x: -1.86943594<br />frame: 8","time: 6<br />x: 0.96878756<br />frame: 8","time: 6<br />x: 1.48784458<br />frame: 8","time: 6<br />x: -1.47560710<br />frame: 8","time: 6<br />x: -2.87121266<br />frame: 8","time: 6<br />x: 2.08368850<br />frame: 8","time: 6<br />x: -0.50527579<br />frame: 8","time: 7<br />x: -0.50312928<br />frame: 8","time: 7<br />x: -2.15548009<br />frame: 8","time: 7<br />x: -2.91169399<br />frame: 8","time: 7<br />x: 1.49770052<br />frame: 8","time: 7<br />x: 1.86480075<br />frame: 8","time: 7<br />x: -0.36658480<br />frame: 8","time: 7<br />x: -0.97128073<br />frame: 8","time: 7<br />x: 2.03624250<br />frame: 8","time: 7<br />x: -0.30999364<br />frame: 8","time: 7<br />x: -2.28210510<br />frame: 8","time: 7<br />x: -0.61390003<br />frame: 8","time: 7<br />x: -0.75622242<br />frame: 8","time: 7<br />x: 1.18036169<br />frame: 8","time: 7<br />x: 0.61455655<br />frame: 8","time: 7<br />x: -0.43750373<br />frame: 8","time: 7<br />x: -2.12617683<br />frame: 8","time: 8<br />x: 1.32681034<br />frame: 8","time: 8<br />x: -0.77990230<br />frame: 8","time: 8<br />x: 0.81447704<br />frame: 8","time: 8<br />x: 0.84406350<br />frame: 8","time: 8<br />x: -0.46705579<br />frame: 8","time: 8<br />x: 2.86082364<br />frame: 8","time: 8<br />x: -2.14017558<br />frame: 8","time: 8<br />x: 0.17095652<br />frame: 8","time: 8<br />x: 2.11696529<br />frame: 8","time: 8<br />x: 0.03478019<br />frame: 8","time: 8<br />x: -0.28795211<br />frame: 8","time: 8<br />x: -0.63535066<br />frame: 8","time: 8<br />x: -1.66796184<br />frame: 8","time: 8<br />x: -0.80526711<br />frame: 8","time: 8<br />x: -0.71538111<br />frame: 8","time: 8<br />x: 0.82038816<br />frame: 8"],"frame":"8","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583,null,-0.276184105225216,-1.87389362192153,null,0.630098551068391,2.43519606987921,null,0.370627864257954,-0.111019499436683,null,0.370627864257954,0.991007665556376,null,0.816899839520583,1.42902333217143,null,0.816899839520583,0.654588862602458,null,0.605887455840394,1.41776063439425,null,-0.116247806352002,2.08058573999553,null,0.709466017509524,2.75865635491572,null,-0.453497173462763,1.1789484660177,null,-0.919322002474128,-0.665050809660074,null,0.709466017509524,1.20065429678208,null,-0.284159743943371,-0.608246322680488,null,0.370627864257954,-1.29142237960068,null,-0.109303314681054,1.65843053619191,null,-0.109303314681054,-0.0835022660332458,null,1.41776063439425,2.00094828782994,null,-0.665050809660074,-1.9718496431245,null,-0.0835022660332458,-0.62388833989535,null,-0.0835022660332458,1.86419039869879,null,-0.111019499436683,-0.0574292290792283,null,-0.608246322680488,-0.256583482125251,null,-1.29142237960068,-1.96239891859462,null,-0.665050809660074,-0.38709711485393,null,-1.29142237960068,-0.600251106725797,null,-0.0835022660332458,0.74029306267284,null,1.65843053619191,3.8034955566722,null,-1.29142237960068,-3.63836635796405,null,-0.0835022660332458,0.0660897145819629,null,0.991007665556376,-0.351523816173168,null,1.20065429678208,1.75395737228598,null,-0.665050809660074,0.924912033035336,null,-0.256583482125251,-1.30593630108152,null,0.74029306267284,3.07080502507755,null,-0.0574292290792283,1.3452761537882,null,-0.62388833989535,0.318712510796175,null,0.74029306267284,1.56655134982527,null,-0.62388833989535,-1.43542883008643,null,1.86419039869879,2.34043867945245,null,0.924912033035336,1.94617044051643,null,-0.62388833989535,0.0214947343107265,null,0.0660897145819629,1.1092332661334,null,-0.0574292290792283,-0.36179834177289,null,0.74029306267284,3.21740397963581,null,-0.38709711485393,0.584123558183514,null,-0.62388833989535,1.24321084467137,null,0.0660897145819629,0.738132183596749,null,-0.600251106725797,-0.908204487563135,null,1.1092332661334,0.604189748612507,null,-1.43542883008643,0.722290986419984,null,-1.30593630108152,-1.90573386490635,null,-1.30593630108152,-2.00048299435227,null,0.318712510796175,0.542637918317463,null,0.584123558183514,-0.572099772057259,null,0.318712510796175,0.741131038583174,null,-0.36179834177289,-1.68655359762182,null,1.56655134982527,1.70763566268555,null,-1.30593630108152,-1.84198429957246,null,-0.36179834177289,-0.673404426351037,null,1.56655134982527,3.12266099242653,null,0.318712510796175,-0.129320780498295,null,0.318712510796175,0.639836048290143,null,1.24321084467137,0.01303859779118,null,1.3452761537882,0.0212174616340499,null,0.741131038583174,2.28599464062077,null,-0.673404426351037,0.648047590811593,null,-1.68655359762182,-1.36440202225901,null,-0.572099772057259,0.958855347677899,null,-0.572099772057259,-0.993339465395833,null,0.639836048290143,-0.518984974527995,null,-0.129320780498295,-1.97468906991976,null,0.604189748612507,1.76151503566965,null,-0.572099772057259,-2.69564965307202,null,-0.673404426351037,-1.86943594446187,null,-0.673404426351037,0.968787564416303,null,0.604189748612507,1.48784458177452,null,-2.00048299435227,-1.47560710331635,null,-1.68655359762182,-2.87121266325259,null,-0.572099772057259,2.08368850008554,null,0.542637918317463,-0.505275791900848,null,-0.518984974527995,-0.503129281462436,null,-2.69564965307202,-2.1554800853798,null,-1.36440202225901,-2.91169398930471,null,0.648047590811593,1.49770052074003,null,0.968787564416303,1.86480074874875,null,-0.505275791900848,-0.366584795091119,null,0.648047590811593,-0.971280731554545,null,1.48784458177452,2.03624250478892,null,-0.505275791900848,-0.309993638089596,null,-1.47560710331635,-2.28210509807982,null,-0.505275791900848,-0.613900027107975,null,-0.505275791900848,-0.756222415753161,null,-0.518984974527995,1.18036169400518,null,0.958855347677899,0.61455655141293,null,-0.505275791900848,-0.437503730496629,null,-1.47560710331635,-2.12617683017041,null,-0.437503730496629,1.32681033849769,null,-0.756222415753161,-0.779902303906169,null,0.61455655141293,0.814477035240365,null,-0.503129281462436,0.844063496177574,null,-0.503129281462436,-0.467055793662738,null,2.03624250478892,2.86082363535566,null,-0.437503730496629,-2.14017558210265,null,-0.309993638089596,0.170956517178086,null,-0.366584795091119,2.11696529423513,null,-0.366584795091119,0.0347801924144859,null,-0.503129281462436,-0.287952110715391,null,1.18036169400518,-0.635350658897616,null,-0.756222415753161,-1.66796183998604,null,-0.756222415753161,-0.805267106606783,null,-0.309993638089596,-0.71538111477141,null,-0.309993638089596,0.820388159757257],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: -0.50312928<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: -0.50312928<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -2.69564965<br />time: 7<br />loc: -2.15548009<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -2.69564965<br />time: 7<br />loc: -2.15548009<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.36440202<br />time: 7<br />loc: -2.91169399<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.36440202<br />time: 7<br />loc: -2.91169399<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: 1.49770052<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: 1.49770052<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.96878756<br />time: 7<br />loc: 1.86480075<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.96878756<br />time: 7<br />loc: 1.86480075<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.36658480<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.36658480<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: -0.97128073<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: -0.97128073<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 1.48784458<br />time: 7<br />loc: 2.03624250<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 1.48784458<br />time: 7<br />loc: 2.03624250<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.30999364<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.30999364<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.28210510<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.28210510<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.61390003<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.61390003<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.75622242<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.75622242<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: 1.18036169<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: 1.18036169<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.95885535<br />time: 7<br />loc: 0.61455655<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.95885535<br />time: 7<br />loc: 0.61455655<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.43750373<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.43750373<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.12617683<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.12617683<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: 1.32681034<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: 1.32681034<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.77990230<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.77990230<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: 0.61455655<br />time: 8<br />loc: 0.81447704<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: 0.61455655<br />time: 8<br />loc: 0.81447704<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: 0.84406350<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: 0.84406350<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.46705579<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.46705579<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: 2.03624250<br />time: 8<br />loc: 2.86082364<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: 2.03624250<br />time: 8<br />loc: 2.86082364<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: -2.14017558<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: -2.14017558<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.17095652<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.17095652<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 2.11696529<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 2.11696529<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 0.03478019<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 0.03478019<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.28795211<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.28795211<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: 1.18036169<br />time: 8<br />loc: -0.63535066<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: 1.18036169<br />time: 8<br />loc: -0.63535066<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -1.66796184<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -1.66796184<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.80526711<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.80526711<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: -0.71538111<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: -0.71538111<br />frame: 8<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.82038816<br />frame: 8<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.82038816<br />frame: 8<br />colour: ancestral line"],"frame":"8","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]},{"name":"9","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,9,9,9,9,9,9,9,9,9,9,9,9,9,9,9,9],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583,-1.87389362192153,2.43519606987921,-0.111019499436683,0.991007665556376,1.42902333217143,0.654588862602458,1.41776063439425,2.08058573999553,2.75865635491572,1.1789484660177,-0.665050809660074,1.20065429678208,-0.608246322680488,-1.29142237960068,1.65843053619191,-0.0835022660332458,2.00094828782994,-1.9718496431245,-0.62388833989535,1.86419039869879,-0.0574292290792283,-0.256583482125251,-1.96239891859462,-0.38709711485393,-0.600251106725797,0.74029306267284,3.8034955566722,-3.63836635796405,0.0660897145819629,-0.351523816173168,1.75395737228598,0.924912033035336,-1.30593630108152,3.07080502507755,1.3452761537882,0.318712510796175,1.56655134982527,-1.43542883008643,2.34043867945245,1.94617044051643,0.0214947343107265,1.1092332661334,-0.36179834177289,3.21740397963581,0.584123558183514,1.24321084467137,0.738132183596749,-0.908204487563135,0.604189748612507,0.722290986419984,-1.90573386490635,-2.00048299435227,0.542637918317463,-0.572099772057259,0.741131038583174,-1.68655359762182,1.70763566268555,-1.84198429957246,-0.673404426351037,3.12266099242653,-0.129320780498295,0.639836048290143,0.01303859779118,0.0212174616340499,2.28599464062077,0.648047590811593,-1.36440202225901,0.958855347677899,-0.993339465395833,-0.518984974527995,-1.97468906991976,1.76151503566965,-2.69564965307202,-1.86943594446187,0.968787564416303,1.48784458177452,-1.47560710331635,-2.87121266325259,2.08368850008554,-0.505275791900848,-0.503129281462436,-2.1554800853798,-2.91169398930471,1.49770052074003,1.86480074874875,-0.366584795091119,-0.971280731554545,2.03624250478892,-0.309993638089596,-2.28210509807982,-0.613900027107975,-0.756222415753161,1.18036169400518,0.61455655141293,-0.437503730496629,-2.12617683017041,1.32681033849769,-0.779902303906169,0.814477035240365,0.844063496177574,-0.467055793662738,2.86082363535566,-2.14017558210265,0.170956517178086,2.11696529423513,0.0347801924144859,-0.287952110715391,-0.635350658897616,-1.66796183998604,-0.805267106606783,-0.71538111477141,0.820388159757257,1.60718394915927,-0.478952902297727,0.594842791975961,1.61882193002937,2.17767077611212,-1.22347027200086,-1.25204189421636,-0.622045617121466,-2.07149636512469,-2.29722148724884,-0.223534028037399,0.423011574448362,1.64582585315539,0.92559997570959,-2.20876888812009,-0.160020112841558],"text":["time: 1<br />x: 0.58552882<br />frame: 9","time: 1<br />x: 0.70946602<br />frame: 9","time: 1<br />x: -0.10930331<br />frame: 9","time: 1<br />x: -0.45349717<br />frame: 9","time: 1<br />x: 0.60588746<br />frame: 9","time: 1<br />x: -1.81795597<br />frame: 9","time: 1<br />x: 0.63009855<br />frame: 9","time: 1<br />x: -0.27618411<br />frame: 9","time: 1<br />x: -0.28415974<br />frame: 9","time: 1<br />x: -0.91932200<br />frame: 9","time: 1<br />x: -0.11624781<br />frame: 9","time: 1<br />x: 1.81731204<br />frame: 9","time: 1<br />x: 0.37062786<br />frame: 9","time: 1<br />x: 0.52021646<br />frame: 9","time: 1<br />x: -0.75053199<br />frame: 9","time: 1<br />x: 0.81689984<br />frame: 9","time: 2<br />x: -1.87389362<br />frame: 9","time: 2<br />x: 2.43519607<br />frame: 9","time: 2<br />x: -0.11101950<br />frame: 9","time: 2<br />x: 0.99100767<br />frame: 9","time: 2<br />x: 1.42902333<br />frame: 9","time: 2<br />x: 0.65458886<br />frame: 9","time: 2<br />x: 1.41776063<br />frame: 9","time: 2<br />x: 2.08058574<br />frame: 9","time: 2<br />x: 2.75865635<br />frame: 9","time: 2<br />x: 1.17894847<br />frame: 9","time: 2<br />x: -0.66505081<br />frame: 9","time: 2<br />x: 1.20065430<br />frame: 9","time: 2<br />x: -0.60824632<br />frame: 9","time: 2<br />x: -1.29142238<br />frame: 9","time: 2<br />x: 1.65843054<br />frame: 9","time: 2<br />x: -0.08350227<br />frame: 9","time: 3<br />x: 2.00094829<br />frame: 9","time: 3<br />x: -1.97184964<br />frame: 9","time: 3<br />x: -0.62388834<br />frame: 9","time: 3<br />x: 1.86419040<br />frame: 9","time: 3<br />x: -0.05742923<br />frame: 9","time: 3<br />x: -0.25658348<br />frame: 9","time: 3<br />x: -1.96239892<br />frame: 9","time: 3<br />x: -0.38709711<br />frame: 9","time: 3<br />x: -0.60025111<br />frame: 9","time: 3<br />x: 0.74029306<br />frame: 9","time: 3<br />x: 3.80349556<br />frame: 9","time: 3<br />x: -3.63836636<br />frame: 9","time: 3<br />x: 0.06608971<br />frame: 9","time: 3<br />x: -0.35152382<br />frame: 9","time: 3<br />x: 1.75395737<br />frame: 9","time: 3<br />x: 0.92491203<br />frame: 9","time: 4<br />x: -1.30593630<br />frame: 9","time: 4<br />x: 3.07080503<br />frame: 9","time: 4<br />x: 1.34527615<br />frame: 9","time: 4<br />x: 0.31871251<br />frame: 9","time: 4<br />x: 1.56655135<br />frame: 9","time: 4<br />x: -1.43542883<br />frame: 9","time: 4<br />x: 2.34043868<br />frame: 9","time: 4<br />x: 1.94617044<br />frame: 9","time: 4<br />x: 0.02149473<br />frame: 9","time: 4<br />x: 1.10923327<br />frame: 9","time: 4<br />x: -0.36179834<br />frame: 9","time: 4<br />x: 3.21740398<br />frame: 9","time: 4<br />x: 0.58412356<br />frame: 9","time: 4<br />x: 1.24321084<br />frame: 9","time: 4<br />x: 0.73813218<br />frame: 9","time: 4<br />x: -0.90820449<br />frame: 9","time: 5<br />x: 0.60418975<br />frame: 9","time: 5<br />x: 0.72229099<br />frame: 9","time: 5<br />x: -1.90573386<br />frame: 9","time: 5<br />x: -2.00048299<br />frame: 9","time: 5<br />x: 0.54263792<br />frame: 9","time: 5<br />x: -0.57209977<br />frame: 9","time: 5<br />x: 0.74113104<br />frame: 9","time: 5<br />x: -1.68655360<br />frame: 9","time: 5<br />x: 1.70763566<br />frame: 9","time: 5<br />x: -1.84198430<br />frame: 9","time: 5<br />x: -0.67340443<br />frame: 9","time: 5<br />x: 3.12266099<br />frame: 9","time: 5<br />x: -0.12932078<br />frame: 9","time: 5<br />x: 0.63983605<br />frame: 9","time: 5<br />x: 0.01303860<br />frame: 9","time: 5<br />x: 0.02121746<br />frame: 9","time: 6<br />x: 2.28599464<br />frame: 9","time: 6<br />x: 0.64804759<br />frame: 9","time: 6<br />x: -1.36440202<br />frame: 9","time: 6<br />x: 0.95885535<br />frame: 9","time: 6<br />x: -0.99333947<br />frame: 9","time: 6<br />x: -0.51898497<br />frame: 9","time: 6<br />x: -1.97468907<br />frame: 9","time: 6<br />x: 1.76151504<br />frame: 9","time: 6<br />x: -2.69564965<br />frame: 9","time: 6<br />x: -1.86943594<br />frame: 9","time: 6<br />x: 0.96878756<br />frame: 9","time: 6<br />x: 1.48784458<br />frame: 9","time: 6<br />x: -1.47560710<br />frame: 9","time: 6<br />x: -2.87121266<br />frame: 9","time: 6<br />x: 2.08368850<br />frame: 9","time: 6<br />x: -0.50527579<br />frame: 9","time: 7<br />x: -0.50312928<br />frame: 9","time: 7<br />x: -2.15548009<br />frame: 9","time: 7<br />x: -2.91169399<br />frame: 9","time: 7<br />x: 1.49770052<br />frame: 9","time: 7<br />x: 1.86480075<br />frame: 9","time: 7<br />x: -0.36658480<br />frame: 9","time: 7<br />x: -0.97128073<br />frame: 9","time: 7<br />x: 2.03624250<br />frame: 9","time: 7<br />x: -0.30999364<br />frame: 9","time: 7<br />x: -2.28210510<br />frame: 9","time: 7<br />x: -0.61390003<br />frame: 9","time: 7<br />x: -0.75622242<br />frame: 9","time: 7<br />x: 1.18036169<br />frame: 9","time: 7<br />x: 0.61455655<br />frame: 9","time: 7<br />x: -0.43750373<br />frame: 9","time: 7<br />x: -2.12617683<br />frame: 9","time: 8<br />x: 1.32681034<br />frame: 9","time: 8<br />x: -0.77990230<br />frame: 9","time: 8<br />x: 0.81447704<br />frame: 9","time: 8<br />x: 0.84406350<br />frame: 9","time: 8<br />x: -0.46705579<br />frame: 9","time: 8<br />x: 2.86082364<br />frame: 9","time: 8<br />x: -2.14017558<br />frame: 9","time: 8<br />x: 0.17095652<br />frame: 9","time: 8<br />x: 2.11696529<br />frame: 9","time: 8<br />x: 0.03478019<br />frame: 9","time: 8<br />x: -0.28795211<br />frame: 9","time: 8<br />x: -0.63535066<br />frame: 9","time: 8<br />x: -1.66796184<br />frame: 9","time: 8<br />x: -0.80526711<br />frame: 9","time: 8<br />x: -0.71538111<br />frame: 9","time: 8<br />x: 0.82038816<br />frame: 9","time: 9<br />x: 1.60718395<br />frame: 9","time: 9<br />x: -0.47895290<br />frame: 9","time: 9<br />x: 0.59484279<br />frame: 9","time: 9<br />x: 1.61882193<br />frame: 9","time: 9<br />x: 2.17767078<br />frame: 9","time: 9<br />x: -1.22347027<br />frame: 9","time: 9<br />x: -1.25204189<br />frame: 9","time: 9<br />x: -0.62204562<br />frame: 9","time: 9<br />x: -2.07149637<br />frame: 9","time: 9<br />x: -2.29722149<br />frame: 9","time: 9<br />x: -0.22353403<br />frame: 9","time: 9<br />x: 0.42301157<br />frame: 9","time: 9<br />x: 1.64582585<br />frame: 9","time: 9<br />x: 0.92559998<br />frame: 9","time: 9<br />x: -2.20876889<br />frame: 9","time: 9<br />x: -0.16002011<br />frame: 9"],"frame":"9","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583,null,-0.276184105225216,-1.87389362192153,null,0.630098551068391,2.43519606987921,null,0.370627864257954,-0.111019499436683,null,0.370627864257954,0.991007665556376,null,0.816899839520583,1.42902333217143,null,0.816899839520583,0.654588862602458,null,0.605887455840394,1.41776063439425,null,-0.116247806352002,2.08058573999553,null,0.709466017509524,2.75865635491572,null,-0.453497173462763,1.1789484660177,null,-0.919322002474128,-0.665050809660074,null,0.709466017509524,1.20065429678208,null,-0.284159743943371,-0.608246322680488,null,0.370627864257954,-1.29142237960068,null,-0.109303314681054,1.65843053619191,null,-0.109303314681054,-0.0835022660332458,null,1.41776063439425,2.00094828782994,null,-0.665050809660074,-1.9718496431245,null,-0.0835022660332458,-0.62388833989535,null,-0.0835022660332458,1.86419039869879,null,-0.111019499436683,-0.0574292290792283,null,-0.608246322680488,-0.256583482125251,null,-1.29142237960068,-1.96239891859462,null,-0.665050809660074,-0.38709711485393,null,-1.29142237960068,-0.600251106725797,null,-0.0835022660332458,0.74029306267284,null,1.65843053619191,3.8034955566722,null,-1.29142237960068,-3.63836635796405,null,-0.0835022660332458,0.0660897145819629,null,0.991007665556376,-0.351523816173168,null,1.20065429678208,1.75395737228598,null,-0.665050809660074,0.924912033035336,null,-0.256583482125251,-1.30593630108152,null,0.74029306267284,3.07080502507755,null,-0.0574292290792283,1.3452761537882,null,-0.62388833989535,0.318712510796175,null,0.74029306267284,1.56655134982527,null,-0.62388833989535,-1.43542883008643,null,1.86419039869879,2.34043867945245,null,0.924912033035336,1.94617044051643,null,-0.62388833989535,0.0214947343107265,null,0.0660897145819629,1.1092332661334,null,-0.0574292290792283,-0.36179834177289,null,0.74029306267284,3.21740397963581,null,-0.38709711485393,0.584123558183514,null,-0.62388833989535,1.24321084467137,null,0.0660897145819629,0.738132183596749,null,-0.600251106725797,-0.908204487563135,null,1.1092332661334,0.604189748612507,null,-1.43542883008643,0.722290986419984,null,-1.30593630108152,-1.90573386490635,null,-1.30593630108152,-2.00048299435227,null,0.318712510796175,0.542637918317463,null,0.584123558183514,-0.572099772057259,null,0.318712510796175,0.741131038583174,null,-0.36179834177289,-1.68655359762182,null,1.56655134982527,1.70763566268555,null,-1.30593630108152,-1.84198429957246,null,-0.36179834177289,-0.673404426351037,null,1.56655134982527,3.12266099242653,null,0.318712510796175,-0.129320780498295,null,0.318712510796175,0.639836048290143,null,1.24321084467137,0.01303859779118,null,1.3452761537882,0.0212174616340499,null,0.741131038583174,2.28599464062077,null,-0.673404426351037,0.648047590811593,null,-1.68655359762182,-1.36440202225901,null,-0.572099772057259,0.958855347677899,null,-0.572099772057259,-0.993339465395833,null,0.639836048290143,-0.518984974527995,null,-0.129320780498295,-1.97468906991976,null,0.604189748612507,1.76151503566965,null,-0.572099772057259,-2.69564965307202,null,-0.673404426351037,-1.86943594446187,null,-0.673404426351037,0.968787564416303,null,0.604189748612507,1.48784458177452,null,-2.00048299435227,-1.47560710331635,null,-1.68655359762182,-2.87121266325259,null,-0.572099772057259,2.08368850008554,null,0.542637918317463,-0.505275791900848,null,-0.518984974527995,-0.503129281462436,null,-2.69564965307202,-2.1554800853798,null,-1.36440202225901,-2.91169398930471,null,0.648047590811593,1.49770052074003,null,0.968787564416303,1.86480074874875,null,-0.505275791900848,-0.366584795091119,null,0.648047590811593,-0.971280731554545,null,1.48784458177452,2.03624250478892,null,-0.505275791900848,-0.309993638089596,null,-1.47560710331635,-2.28210509807982,null,-0.505275791900848,-0.613900027107975,null,-0.505275791900848,-0.756222415753161,null,-0.518984974527995,1.18036169400518,null,0.958855347677899,0.61455655141293,null,-0.505275791900848,-0.437503730496629,null,-1.47560710331635,-2.12617683017041,null,-0.437503730496629,1.32681033849769,null,-0.756222415753161,-0.779902303906169,null,0.61455655141293,0.814477035240365,null,-0.503129281462436,0.844063496177574,null,-0.503129281462436,-0.467055793662738,null,2.03624250478892,2.86082363535566,null,-0.437503730496629,-2.14017558210265,null,-0.309993638089596,0.170956517178086,null,-0.366584795091119,2.11696529423513,null,-0.366584795091119,0.0347801924144859,null,-0.503129281462436,-0.287952110715391,null,1.18036169400518,-0.635350658897616,null,-0.756222415753161,-1.66796183998604,null,-0.756222415753161,-0.805267106606783,null,-0.309993638089596,-0.71538111477141,null,-0.309993638089596,0.820388159757257,null,0.820388159757257,1.60718394915927,null,-0.779902303906169,-0.478952902297727,null,-0.71538111477141,0.594842791975961,null,0.820388159757257,1.61882193002937,null,1.32681033849769,2.17767077611212,null,-0.779902303906169,-1.22347027200086,null,-0.805267106606783,-1.25204189421636,null,-0.635350658897616,-0.622045617121466,null,-0.635350658897616,-2.07149636512469,null,-1.66796183998604,-2.29722148724884,null,-0.467055793662738,-0.223534028037399,null,-0.635350658897616,0.423011574448362,null,0.814477035240365,1.64582585315539,null,0.820388159757257,0.92559997570959,null,-0.467055793662738,-2.20876888812009,null,-0.805267106606783,-0.160020112841558],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: -0.50312928<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: -0.50312928<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -2.69564965<br />time: 7<br />loc: -2.15548009<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -2.69564965<br />time: 7<br />loc: -2.15548009<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.36440202<br />time: 7<br />loc: -2.91169399<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.36440202<br />time: 7<br />loc: -2.91169399<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: 1.49770052<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: 1.49770052<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.96878756<br />time: 7<br />loc: 1.86480075<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.96878756<br />time: 7<br />loc: 1.86480075<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.36658480<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.36658480<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: -0.97128073<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: -0.97128073<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 1.48784458<br />time: 7<br />loc: 2.03624250<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 1.48784458<br />time: 7<br />loc: 2.03624250<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.30999364<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.30999364<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.28210510<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.28210510<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.61390003<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.61390003<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.75622242<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.75622242<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: 1.18036169<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: 1.18036169<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.95885535<br />time: 7<br />loc: 0.61455655<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.95885535<br />time: 7<br />loc: 0.61455655<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.43750373<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.43750373<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.12617683<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.12617683<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: 1.32681034<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: 1.32681034<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.77990230<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.77990230<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: 0.61455655<br />time: 8<br />loc: 0.81447704<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: 0.61455655<br />time: 8<br />loc: 0.81447704<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: 0.84406350<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: 0.84406350<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.46705579<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.46705579<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: 2.03624250<br />time: 8<br />loc: 2.86082364<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: 2.03624250<br />time: 8<br />loc: 2.86082364<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: -2.14017558<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: -2.14017558<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.17095652<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.17095652<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 2.11696529<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 2.11696529<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 0.03478019<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 0.03478019<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.28795211<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.28795211<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: 1.18036169<br />time: 8<br />loc: -0.63535066<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: 1.18036169<br />time: 8<br />loc: -0.63535066<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -1.66796184<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -1.66796184<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.80526711<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.80526711<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: -0.71538111<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: -0.71538111<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.82038816<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.82038816<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 1.60718395<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 1.60718395<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.77990230<br />time: 9<br />loc: -0.47895290<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.77990230<br />time: 9<br />loc: -0.47895290<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.71538111<br />time: 9<br />loc: 0.59484279<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.71538111<br />time: 9<br />loc: 0.59484279<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 1.61882193<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 1.61882193<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 1.32681034<br />time: 9<br />loc: 2.17767078<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 1.32681034<br />time: 9<br />loc: 2.17767078<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.77990230<br />time: 9<br />loc: -1.22347027<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.77990230<br />time: 9<br />loc: -1.22347027<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.80526711<br />time: 9<br />loc: -1.25204189<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.80526711<br />time: 9<br />loc: -1.25204189<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: -0.62204562<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: -0.62204562<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: -2.07149637<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: -2.07149637<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -1.66796184<br />time: 9<br />loc: -2.29722149<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -1.66796184<br />time: 9<br />loc: -2.29722149<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.46705579<br />time: 9<br />loc: -0.22353403<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.46705579<br />time: 9<br />loc: -0.22353403<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: 0.42301157<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: 0.42301157<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 0.81447704<br />time: 9<br />loc: 1.64582585<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 0.81447704<br />time: 9<br />loc: 1.64582585<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 0.92559998<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 0.92559998<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.46705579<br />time: 9<br />loc: -2.20876889<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.46705579<br />time: 9<br />loc: -2.20876889<br />frame: 9<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.80526711<br />time: 9<br />loc: -0.16002011<br />frame: 9<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.80526711<br />time: 9<br />loc: -0.16002011<br />frame: 9<br />colour: ancestral line"],"frame":"9","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]},{"name":"10","data":[{"x":[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,9,9,9,9,9,9,9,9,9,9,9,9,9,9,9,9,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10],"y":[0.585528817843856,0.709466017509524,-0.109303314681054,-0.453497173462763,0.605887455840394,-1.81795596770373,0.630098551068391,-0.276184105225216,-0.284159743943371,-0.919322002474128,-0.116247806352002,1.81731204370422,0.370627864257954,0.520216457554957,-0.750531994502331,0.816899839520583,-1.87389362192153,2.43519606987921,-0.111019499436683,0.991007665556376,1.42902333217143,0.654588862602458,1.41776063439425,2.08058573999553,2.75865635491572,1.1789484660177,-0.665050809660074,1.20065429678208,-0.608246322680488,-1.29142237960068,1.65843053619191,-0.0835022660332458,2.00094828782994,-1.9718496431245,-0.62388833989535,1.86419039869879,-0.0574292290792283,-0.256583482125251,-1.96239891859462,-0.38709711485393,-0.600251106725797,0.74029306267284,3.8034955566722,-3.63836635796405,0.0660897145819629,-0.351523816173168,1.75395737228598,0.924912033035336,-1.30593630108152,3.07080502507755,1.3452761537882,0.318712510796175,1.56655134982527,-1.43542883008643,2.34043867945245,1.94617044051643,0.0214947343107265,1.1092332661334,-0.36179834177289,3.21740397963581,0.584123558183514,1.24321084467137,0.738132183596749,-0.908204487563135,0.604189748612507,0.722290986419984,-1.90573386490635,-2.00048299435227,0.542637918317463,-0.572099772057259,0.741131038583174,-1.68655359762182,1.70763566268555,-1.84198429957246,-0.673404426351037,3.12266099242653,-0.129320780498295,0.639836048290143,0.01303859779118,0.0212174616340499,2.28599464062077,0.648047590811593,-1.36440202225901,0.958855347677899,-0.993339465395833,-0.518984974527995,-1.97468906991976,1.76151503566965,-2.69564965307202,-1.86943594446187,0.968787564416303,1.48784458177452,-1.47560710331635,-2.87121266325259,2.08368850008554,-0.505275791900848,-0.503129281462436,-2.1554800853798,-2.91169398930471,1.49770052074003,1.86480074874875,-0.366584795091119,-0.971280731554545,2.03624250478892,-0.309993638089596,-2.28210509807982,-0.613900027107975,-0.756222415753161,1.18036169400518,0.61455655141293,-0.437503730496629,-2.12617683017041,1.32681033849769,-0.779902303906169,0.814477035240365,0.844063496177574,-0.467055793662738,2.86082363535566,-2.14017558210265,0.170956517178086,2.11696529423513,0.0347801924144859,-0.287952110715391,-0.635350658897616,-1.66796183998604,-0.805267106606783,-0.71538111477141,0.820388159757257,1.60718394915927,-0.478952902297727,0.594842791975961,1.61882193002937,2.17767077611212,-1.22347027200086,-1.25204189421636,-0.622045617121466,-2.07149636512469,-2.29722148724884,-0.223534028037399,0.423011574448362,1.64582585315539,0.92559997570959,-2.20876888812009,-0.160020112841558,1.25513617012079,-2.20115530944284,-1.53978325637266,-0.154588429900398,-0.384220151635263,-1.86703260501039,-0.373724316245719,0.154229781657321,1.31237913884958,-0.579773852518272,1.96836963981539,0.435194080572735,1.95712843883276,-0.544753800714326,-0.54360808437254,-0.939281183271253],"text":["time: 1<br />x: 0.58552882<br />frame: 10","time: 1<br />x: 0.70946602<br />frame: 10","time: 1<br />x: -0.10930331<br />frame: 10","time: 1<br />x: -0.45349717<br />frame: 10","time: 1<br />x: 0.60588746<br />frame: 10","time: 1<br />x: -1.81795597<br />frame: 10","time: 1<br />x: 0.63009855<br />frame: 10","time: 1<br />x: -0.27618411<br />frame: 10","time: 1<br />x: -0.28415974<br />frame: 10","time: 1<br />x: -0.91932200<br />frame: 10","time: 1<br />x: -0.11624781<br />frame: 10","time: 1<br />x: 1.81731204<br />frame: 10","time: 1<br />x: 0.37062786<br />frame: 10","time: 1<br />x: 0.52021646<br />frame: 10","time: 1<br />x: -0.75053199<br />frame: 10","time: 1<br />x: 0.81689984<br />frame: 10","time: 2<br />x: -1.87389362<br />frame: 10","time: 2<br />x: 2.43519607<br />frame: 10","time: 2<br />x: -0.11101950<br />frame: 10","time: 2<br />x: 0.99100767<br />frame: 10","time: 2<br />x: 1.42902333<br />frame: 10","time: 2<br />x: 0.65458886<br />frame: 10","time: 2<br />x: 1.41776063<br />frame: 10","time: 2<br />x: 2.08058574<br />frame: 10","time: 2<br />x: 2.75865635<br />frame: 10","time: 2<br />x: 1.17894847<br />frame: 10","time: 2<br />x: -0.66505081<br />frame: 10","time: 2<br />x: 1.20065430<br />frame: 10","time: 2<br />x: -0.60824632<br />frame: 10","time: 2<br />x: -1.29142238<br />frame: 10","time: 2<br />x: 1.65843054<br />frame: 10","time: 2<br />x: -0.08350227<br />frame: 10","time: 3<br />x: 2.00094829<br />frame: 10","time: 3<br />x: -1.97184964<br />frame: 10","time: 3<br />x: -0.62388834<br />frame: 10","time: 3<br />x: 1.86419040<br />frame: 10","time: 3<br />x: -0.05742923<br />frame: 10","time: 3<br />x: -0.25658348<br />frame: 10","time: 3<br />x: -1.96239892<br />frame: 10","time: 3<br />x: -0.38709711<br />frame: 10","time: 3<br />x: -0.60025111<br />frame: 10","time: 3<br />x: 0.74029306<br />frame: 10","time: 3<br />x: 3.80349556<br />frame: 10","time: 3<br />x: -3.63836636<br />frame: 10","time: 3<br />x: 0.06608971<br />frame: 10","time: 3<br />x: -0.35152382<br />frame: 10","time: 3<br />x: 1.75395737<br />frame: 10","time: 3<br />x: 0.92491203<br />frame: 10","time: 4<br />x: -1.30593630<br />frame: 10","time: 4<br />x: 3.07080503<br />frame: 10","time: 4<br />x: 1.34527615<br />frame: 10","time: 4<br />x: 0.31871251<br />frame: 10","time: 4<br />x: 1.56655135<br />frame: 10","time: 4<br />x: -1.43542883<br />frame: 10","time: 4<br />x: 2.34043868<br />frame: 10","time: 4<br />x: 1.94617044<br />frame: 10","time: 4<br />x: 0.02149473<br />frame: 10","time: 4<br />x: 1.10923327<br />frame: 10","time: 4<br />x: -0.36179834<br />frame: 10","time: 4<br />x: 3.21740398<br />frame: 10","time: 4<br />x: 0.58412356<br />frame: 10","time: 4<br />x: 1.24321084<br />frame: 10","time: 4<br />x: 0.73813218<br />frame: 10","time: 4<br />x: -0.90820449<br />frame: 10","time: 5<br />x: 0.60418975<br />frame: 10","time: 5<br />x: 0.72229099<br />frame: 10","time: 5<br />x: -1.90573386<br />frame: 10","time: 5<br />x: -2.00048299<br />frame: 10","time: 5<br />x: 0.54263792<br />frame: 10","time: 5<br />x: -0.57209977<br />frame: 10","time: 5<br />x: 0.74113104<br />frame: 10","time: 5<br />x: -1.68655360<br />frame: 10","time: 5<br />x: 1.70763566<br />frame: 10","time: 5<br />x: -1.84198430<br />frame: 10","time: 5<br />x: -0.67340443<br />frame: 10","time: 5<br />x: 3.12266099<br />frame: 10","time: 5<br />x: -0.12932078<br />frame: 10","time: 5<br />x: 0.63983605<br />frame: 10","time: 5<br />x: 0.01303860<br />frame: 10","time: 5<br />x: 0.02121746<br />frame: 10","time: 6<br />x: 2.28599464<br />frame: 10","time: 6<br />x: 0.64804759<br />frame: 10","time: 6<br />x: -1.36440202<br />frame: 10","time: 6<br />x: 0.95885535<br />frame: 10","time: 6<br />x: -0.99333947<br />frame: 10","time: 6<br />x: -0.51898497<br />frame: 10","time: 6<br />x: -1.97468907<br />frame: 10","time: 6<br />x: 1.76151504<br />frame: 10","time: 6<br />x: -2.69564965<br />frame: 10","time: 6<br />x: -1.86943594<br />frame: 10","time: 6<br />x: 0.96878756<br />frame: 10","time: 6<br />x: 1.48784458<br />frame: 10","time: 6<br />x: -1.47560710<br />frame: 10","time: 6<br />x: -2.87121266<br />frame: 10","time: 6<br />x: 2.08368850<br />frame: 10","time: 6<br />x: -0.50527579<br />frame: 10","time: 7<br />x: -0.50312928<br />frame: 10","time: 7<br />x: -2.15548009<br />frame: 10","time: 7<br />x: -2.91169399<br />frame: 10","time: 7<br />x: 1.49770052<br />frame: 10","time: 7<br />x: 1.86480075<br />frame: 10","time: 7<br />x: -0.36658480<br />frame: 10","time: 7<br />x: -0.97128073<br />frame: 10","time: 7<br />x: 2.03624250<br />frame: 10","time: 7<br />x: -0.30999364<br />frame: 10","time: 7<br />x: -2.28210510<br />frame: 10","time: 7<br />x: -0.61390003<br />frame: 10","time: 7<br />x: -0.75622242<br />frame: 10","time: 7<br />x: 1.18036169<br />frame: 10","time: 7<br />x: 0.61455655<br />frame: 10","time: 7<br />x: -0.43750373<br />frame: 10","time: 7<br />x: -2.12617683<br />frame: 10","time: 8<br />x: 1.32681034<br />frame: 10","time: 8<br />x: -0.77990230<br />frame: 10","time: 8<br />x: 0.81447704<br />frame: 10","time: 8<br />x: 0.84406350<br />frame: 10","time: 8<br />x: -0.46705579<br />frame: 10","time: 8<br />x: 2.86082364<br />frame: 10","time: 8<br />x: -2.14017558<br />frame: 10","time: 8<br />x: 0.17095652<br />frame: 10","time: 8<br />x: 2.11696529<br />frame: 10","time: 8<br />x: 0.03478019<br />frame: 10","time: 8<br />x: -0.28795211<br />frame: 10","time: 8<br />x: -0.63535066<br />frame: 10","time: 8<br />x: -1.66796184<br />frame: 10","time: 8<br />x: -0.80526711<br />frame: 10","time: 8<br />x: -0.71538111<br />frame: 10","time: 8<br />x: 0.82038816<br />frame: 10","time: 9<br />x: 1.60718395<br />frame: 10","time: 9<br />x: -0.47895290<br />frame: 10","time: 9<br />x: 0.59484279<br />frame: 10","time: 9<br />x: 1.61882193<br />frame: 10","time: 9<br />x: 2.17767078<br />frame: 10","time: 9<br />x: -1.22347027<br />frame: 10","time: 9<br />x: -1.25204189<br />frame: 10","time: 9<br />x: -0.62204562<br />frame: 10","time: 9<br />x: -2.07149637<br />frame: 10","time: 9<br />x: -2.29722149<br />frame: 10","time: 9<br />x: -0.22353403<br />frame: 10","time: 9<br />x: 0.42301157<br />frame: 10","time: 9<br />x: 1.64582585<br />frame: 10","time: 9<br />x: 0.92559998<br />frame: 10","time: 9<br />x: -2.20876889<br />frame: 10","time: 9<br />x: -0.16002011<br />frame: 10","time: 10<br />x: 1.25513617<br />frame: 10","time: 10<br />x: -2.20115531<br />frame: 10","time: 10<br />x: -1.53978326<br />frame: 10","time: 10<br />x: -0.15458843<br />frame: 10","time: 10<br />x: -0.38422015<br />frame: 10","time: 10<br />x: -1.86703261<br />frame: 10","time: 10<br />x: -0.37372432<br />frame: 10","time: 10<br />x: 0.15422978<br />frame: 10","time: 10<br />x: 1.31237914<br />frame: 10","time: 10<br />x: -0.57977385<br />frame: 10","time: 10<br />x: 1.96836964<br />frame: 10","time: 10<br />x: 0.43519408<br />frame: 10","time: 10<br />x: 1.95712844<br />frame: 10","time: 10<br />x: -0.54475380<br />frame: 10","time: 10<br />x: -0.54360808<br />frame: 10","time: 10<br />x: -0.93928118<br />frame: 10"],"frame":"10","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,0,0,1)","opacity":1,"size":5.66929133858268,"symbol":"circle","line":{"width":1.88976377952756,"color":"rgba(0,0,0,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true},{"x":[1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,1,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,1,2,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,2,3,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,3,4,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,4,5,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,5,6,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,6,7,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,7,8,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,8,9,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10,null,9,10],"y":[0.585528817843856,0.585528817843856,null,0.709466017509524,0.709466017509524,null,-0.109303314681054,-0.109303314681054,null,-0.453497173462763,-0.453497173462763,null,0.605887455840394,0.605887455840394,null,-1.81795596770373,-1.81795596770373,null,0.630098551068391,0.630098551068391,null,-0.276184105225216,-0.276184105225216,null,-0.284159743943371,-0.284159743943371,null,-0.919322002474128,-0.919322002474128,null,-0.116247806352002,-0.116247806352002,null,1.81731204370422,1.81731204370422,null,0.370627864257954,0.370627864257954,null,0.520216457554957,0.520216457554957,null,-0.750531994502331,-0.750531994502331,null,0.816899839520583,0.816899839520583,null,-0.276184105225216,-1.87389362192153,null,0.630098551068391,2.43519606987921,null,0.370627864257954,-0.111019499436683,null,0.370627864257954,0.991007665556376,null,0.816899839520583,1.42902333217143,null,0.816899839520583,0.654588862602458,null,0.605887455840394,1.41776063439425,null,-0.116247806352002,2.08058573999553,null,0.709466017509524,2.75865635491572,null,-0.453497173462763,1.1789484660177,null,-0.919322002474128,-0.665050809660074,null,0.709466017509524,1.20065429678208,null,-0.284159743943371,-0.608246322680488,null,0.370627864257954,-1.29142237960068,null,-0.109303314681054,1.65843053619191,null,-0.109303314681054,-0.0835022660332458,null,1.41776063439425,2.00094828782994,null,-0.665050809660074,-1.9718496431245,null,-0.0835022660332458,-0.62388833989535,null,-0.0835022660332458,1.86419039869879,null,-0.111019499436683,-0.0574292290792283,null,-0.608246322680488,-0.256583482125251,null,-1.29142237960068,-1.96239891859462,null,-0.665050809660074,-0.38709711485393,null,-1.29142237960068,-0.600251106725797,null,-0.0835022660332458,0.74029306267284,null,1.65843053619191,3.8034955566722,null,-1.29142237960068,-3.63836635796405,null,-0.0835022660332458,0.0660897145819629,null,0.991007665556376,-0.351523816173168,null,1.20065429678208,1.75395737228598,null,-0.665050809660074,0.924912033035336,null,-0.256583482125251,-1.30593630108152,null,0.74029306267284,3.07080502507755,null,-0.0574292290792283,1.3452761537882,null,-0.62388833989535,0.318712510796175,null,0.74029306267284,1.56655134982527,null,-0.62388833989535,-1.43542883008643,null,1.86419039869879,2.34043867945245,null,0.924912033035336,1.94617044051643,null,-0.62388833989535,0.0214947343107265,null,0.0660897145819629,1.1092332661334,null,-0.0574292290792283,-0.36179834177289,null,0.74029306267284,3.21740397963581,null,-0.38709711485393,0.584123558183514,null,-0.62388833989535,1.24321084467137,null,0.0660897145819629,0.738132183596749,null,-0.600251106725797,-0.908204487563135,null,1.1092332661334,0.604189748612507,null,-1.43542883008643,0.722290986419984,null,-1.30593630108152,-1.90573386490635,null,-1.30593630108152,-2.00048299435227,null,0.318712510796175,0.542637918317463,null,0.584123558183514,-0.572099772057259,null,0.318712510796175,0.741131038583174,null,-0.36179834177289,-1.68655359762182,null,1.56655134982527,1.70763566268555,null,-1.30593630108152,-1.84198429957246,null,-0.36179834177289,-0.673404426351037,null,1.56655134982527,3.12266099242653,null,0.318712510796175,-0.129320780498295,null,0.318712510796175,0.639836048290143,null,1.24321084467137,0.01303859779118,null,1.3452761537882,0.0212174616340499,null,0.741131038583174,2.28599464062077,null,-0.673404426351037,0.648047590811593,null,-1.68655359762182,-1.36440202225901,null,-0.572099772057259,0.958855347677899,null,-0.572099772057259,-0.993339465395833,null,0.639836048290143,-0.518984974527995,null,-0.129320780498295,-1.97468906991976,null,0.604189748612507,1.76151503566965,null,-0.572099772057259,-2.69564965307202,null,-0.673404426351037,-1.86943594446187,null,-0.673404426351037,0.968787564416303,null,0.604189748612507,1.48784458177452,null,-2.00048299435227,-1.47560710331635,null,-1.68655359762182,-2.87121266325259,null,-0.572099772057259,2.08368850008554,null,0.542637918317463,-0.505275791900848,null,-0.518984974527995,-0.503129281462436,null,-2.69564965307202,-2.1554800853798,null,-1.36440202225901,-2.91169398930471,null,0.648047590811593,1.49770052074003,null,0.968787564416303,1.86480074874875,null,-0.505275791900848,-0.366584795091119,null,0.648047590811593,-0.971280731554545,null,1.48784458177452,2.03624250478892,null,-0.505275791900848,-0.309993638089596,null,-1.47560710331635,-2.28210509807982,null,-0.505275791900848,-0.613900027107975,null,-0.505275791900848,-0.756222415753161,null,-0.518984974527995,1.18036169400518,null,0.958855347677899,0.61455655141293,null,-0.505275791900848,-0.437503730496629,null,-1.47560710331635,-2.12617683017041,null,-0.437503730496629,1.32681033849769,null,-0.756222415753161,-0.779902303906169,null,0.61455655141293,0.814477035240365,null,-0.503129281462436,0.844063496177574,null,-0.503129281462436,-0.467055793662738,null,2.03624250478892,2.86082363535566,null,-0.437503730496629,-2.14017558210265,null,-0.309993638089596,0.170956517178086,null,-0.366584795091119,2.11696529423513,null,-0.366584795091119,0.0347801924144859,null,-0.503129281462436,-0.287952110715391,null,1.18036169400518,-0.635350658897616,null,-0.756222415753161,-1.66796183998604,null,-0.756222415753161,-0.805267106606783,null,-0.309993638089596,-0.71538111477141,null,-0.309993638089596,0.820388159757257,null,0.820388159757257,1.60718394915927,null,-0.779902303906169,-0.478952902297727,null,-0.71538111477141,0.594842791975961,null,0.820388159757257,1.61882193002937,null,1.32681033849769,2.17767077611212,null,-0.779902303906169,-1.22347027200086,null,-0.805267106606783,-1.25204189421636,null,-0.635350658897616,-0.622045617121466,null,-0.635350658897616,-2.07149636512469,null,-1.66796183998604,-2.29722148724884,null,-0.467055793662738,-0.223534028037399,null,-0.635350658897616,0.423011574448362,null,0.814477035240365,1.64582585315539,null,0.820388159757257,0.92559997570959,null,-0.467055793662738,-2.20876888812009,null,-0.805267106606783,-0.160020112841558,null,0.594842791975961,1.25513617012079,null,-0.478952902297727,-2.20115530944284,null,0.594842791975961,-1.53978325637266,null,-0.223534028037399,-0.154588429900398,null,-1.25204189421636,-0.384220151635263,null,0.423011574448362,-1.86703260501039,null,-0.223534028037399,-0.373724316245719,null,0.423011574448362,0.154229781657321,null,-0.478952902297727,1.31237913884958,null,-1.25204189421636,-0.579773852518272,null,2.17767077611212,1.96836963981539,null,0.423011574448362,0.435194080572735,null,0.423011574448362,1.95712843883276,null,-0.622045617121466,-0.544753800714326,null,-0.622045617121466,-0.54360808437254,null,-0.160020112841558,-0.939281183271253],"text":["pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.58552882<br />time: 1<br />loc: 0.58552882<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 1<br />loc: 0.70946602<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 1<br />loc: -0.10930331<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 1<br />loc: -0.45349717<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 1<br />loc: 0.60588746<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -1.81795597<br />time: 1<br />loc: -1.81795597<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 1<br />loc: 0.63009855<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 1<br />loc: -0.27618411<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 1<br />loc: -0.28415974<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 1<br />loc: -0.91932200<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 1<br />loc: -0.11624781<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 1.81731204<br />time: 1<br />loc: 1.81731204<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 1<br />loc: 0.37062786<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.52021646<br />time: 1<br />loc: 0.52021646<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.75053199<br />time: 1<br />loc: -0.75053199<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 1<br />loc: 0.81689984<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.27618411<br />time: 2<br />loc: -1.87389362<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.63009855<br />time: 2<br />loc: 2.43519607<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -0.11101950<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: 0.99100767<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 1.42902333<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.81689984<br />time: 2<br />loc: 0.65458886<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.60588746<br />time: 2<br />loc: 1.41776063<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.11624781<br />time: 2<br />loc: 2.08058574<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 2.75865635<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.45349717<br />time: 2<br />loc: 1.17894847<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.91932200<br />time: 2<br />loc: -0.66505081<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.70946602<br />time: 2<br />loc: 1.20065430<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.28415974<br />time: 2<br />loc: -0.60824632<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: 0.37062786<br />time: 2<br />loc: -1.29142238<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: 1.65843054<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 1<br />anc.loc: -0.10930331<br />time: 2<br />loc: -0.08350227<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.41776063<br />time: 3<br />loc: 2.00094829<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -1.97184964<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: -0.62388834<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 1.86419040<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.11101950<br />time: 3<br />loc: -0.05742923<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.60824632<br />time: 3<br />loc: -0.25658348<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -1.96239892<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: -0.38709711<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -0.60025111<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.74029306<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.65843054<br />time: 3<br />loc: 3.80349556<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -1.29142238<br />time: 3<br />loc: -3.63836636<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.08350227<br />time: 3<br />loc: 0.06608971<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 0.99100767<br />time: 3<br />loc: -0.35152382<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: 1.20065430<br />time: 3<br />loc: 1.75395737<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 2<br />anc.loc: -0.66505081<br />time: 3<br />loc: 0.92491203<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.25658348<br />time: 4<br />loc: -1.30593630<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.07080503<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: 1.34527615<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.31871251<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 1.56655135<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: -1.43542883<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 1.86419040<br />time: 4<br />loc: 2.34043868<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.92491203<br />time: 4<br />loc: 1.94617044<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 0.02149473<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 1.10923327<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.05742923<br />time: 4<br />loc: -0.36179834<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.74029306<br />time: 4<br />loc: 3.21740398<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.38709711<br />time: 4<br />loc: 0.58412356<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.62388834<br />time: 4<br />loc: 1.24321084<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: 0.06608971<br />time: 4<br />loc: 0.73813218<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 3<br />anc.loc: -0.60025111<br />time: 4<br />loc: -0.90820449<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.10923327<br />time: 5<br />loc: 0.60418975<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.43542883<br />time: 5<br />loc: 0.72229099<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.90573386<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -2.00048299<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.54263792<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.58412356<br />time: 5<br />loc: -0.57209977<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.74113104<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -1.68655360<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 1.70763566<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -1.30593630<br />time: 5<br />loc: -1.84198430<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: -0.36179834<br />time: 5<br />loc: -0.67340443<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.56655135<br />time: 5<br />loc: 3.12266099<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: -0.12932078<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 0.31871251<br />time: 5<br />loc: 0.63983605<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.24321084<br />time: 5<br />loc: 0.01303860<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 4<br />anc.loc: 1.34527615<br />time: 5<br />loc: 0.02121746<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.74113104<br />time: 6<br />loc: 2.28599464<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.64804759<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -1.36440202<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 0.95885535<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -0.99333947<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.63983605<br />time: 6<br />loc: -0.51898497<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.12932078<br />time: 6<br />loc: -1.97468907<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.76151504<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: -2.69564965<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: -1.86943594<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.67340443<br />time: 6<br />loc: 0.96878756<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.60418975<br />time: 6<br />loc: 1.48784458<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -2.00048299<br />time: 6<br />loc: -1.47560710<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -1.68655360<br />time: 6<br />loc: -2.87121266<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: -0.57209977<br />time: 6<br />loc: 2.08368850<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 5<br />anc.loc: 0.54263792<br />time: 6<br />loc: -0.50527579<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: -0.50312928<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: -0.50312928<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -2.69564965<br />time: 7<br />loc: -2.15548009<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -2.69564965<br />time: 7<br />loc: -2.15548009<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.36440202<br />time: 7<br />loc: -2.91169399<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.36440202<br />time: 7<br />loc: -2.91169399<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: 1.49770052<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: 1.49770052<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.96878756<br />time: 7<br />loc: 1.86480075<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.96878756<br />time: 7<br />loc: 1.86480075<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.36658480<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.36658480<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: -0.97128073<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.64804759<br />time: 7<br />loc: -0.97128073<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 1.48784458<br />time: 7<br />loc: 2.03624250<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 1.48784458<br />time: 7<br />loc: 2.03624250<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.30999364<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.30999364<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.28210510<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.28210510<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.61390003<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.61390003<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.75622242<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.75622242<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: 1.18036169<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.51898497<br />time: 7<br />loc: 1.18036169<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: 0.95885535<br />time: 7<br />loc: 0.61455655<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: 0.95885535<br />time: 7<br />loc: 0.61455655<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.43750373<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -0.50527579<br />time: 7<br />loc: -0.43750373<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.12617683<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 6<br />anc.loc: -1.47560710<br />time: 7<br />loc: -2.12617683<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: 1.32681034<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: 1.32681034<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.77990230<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.77990230<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: 0.61455655<br />time: 8<br />loc: 0.81447704<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: 0.61455655<br />time: 8<br />loc: 0.81447704<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: 0.84406350<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: 0.84406350<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.46705579<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.46705579<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: 2.03624250<br />time: 8<br />loc: 2.86082364<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: 2.03624250<br />time: 8<br />loc: 2.86082364<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: -2.14017558<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.43750373<br />time: 8<br />loc: -2.14017558<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.17095652<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.17095652<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 2.11696529<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 2.11696529<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 0.03478019<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.36658480<br />time: 8<br />loc: 0.03478019<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.28795211<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.50312928<br />time: 8<br />loc: -0.28795211<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: 1.18036169<br />time: 8<br />loc: -0.63535066<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: 1.18036169<br />time: 8<br />loc: -0.63535066<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -1.66796184<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -1.66796184<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.80526711<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.75622242<br />time: 8<br />loc: -0.80526711<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: -0.71538111<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: -0.71538111<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.82038816<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 7<br />anc.loc: -0.30999364<br />time: 8<br />loc: 0.82038816<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 1.60718395<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 1.60718395<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.77990230<br />time: 9<br />loc: -0.47895290<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.77990230<br />time: 9<br />loc: -0.47895290<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.71538111<br />time: 9<br />loc: 0.59484279<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.71538111<br />time: 9<br />loc: 0.59484279<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 1.61882193<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 1.61882193<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 1.32681034<br />time: 9<br />loc: 2.17767078<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 1.32681034<br />time: 9<br />loc: 2.17767078<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.77990230<br />time: 9<br />loc: -1.22347027<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.77990230<br />time: 9<br />loc: -1.22347027<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.80526711<br />time: 9<br />loc: -1.25204189<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.80526711<br />time: 9<br />loc: -1.25204189<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: -0.62204562<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: -0.62204562<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: -2.07149637<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: -2.07149637<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -1.66796184<br />time: 9<br />loc: -2.29722149<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -1.66796184<br />time: 9<br />loc: -2.29722149<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.46705579<br />time: 9<br />loc: -0.22353403<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.46705579<br />time: 9<br />loc: -0.22353403<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: 0.42301157<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.63535066<br />time: 9<br />loc: 0.42301157<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 0.81447704<br />time: 9<br />loc: 1.64582585<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 0.81447704<br />time: 9<br />loc: 1.64582585<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 0.92559998<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: 0.82038816<br />time: 9<br />loc: 0.92559998<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.46705579<br />time: 9<br />loc: -2.20876889<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.46705579<br />time: 9<br />loc: -2.20876889<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 8<br />anc.loc: -0.80526711<br />time: 9<br />loc: -0.16002011<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 8<br />anc.loc: -0.80526711<br />time: 9<br />loc: -0.16002011<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: 0.59484279<br />time: 10<br />loc: 1.25513617<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: 0.59484279<br />time: 10<br />loc: 1.25513617<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: -0.47895290<br />time: 10<br />loc: -2.20115531<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: -0.47895290<br />time: 10<br />loc: -2.20115531<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: 0.59484279<br />time: 10<br />loc: -1.53978326<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: 0.59484279<br />time: 10<br />loc: -1.53978326<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: -0.22353403<br />time: 10<br />loc: -0.15458843<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: -0.22353403<br />time: 10<br />loc: -0.15458843<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: -1.25204189<br />time: 10<br />loc: -0.38422015<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: -1.25204189<br />time: 10<br />loc: -0.38422015<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: 0.42301157<br />time: 10<br />loc: -1.86703261<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: 0.42301157<br />time: 10<br />loc: -1.86703261<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: -0.22353403<br />time: 10<br />loc: -0.37372432<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: -0.22353403<br />time: 10<br />loc: -0.37372432<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: 0.42301157<br />time: 10<br />loc: 0.15422978<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: 0.42301157<br />time: 10<br />loc: 0.15422978<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: -0.47895290<br />time: 10<br />loc: 1.31237914<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: -0.47895290<br />time: 10<br />loc: 1.31237914<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: -1.25204189<br />time: 10<br />loc: -0.57977385<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: -1.25204189<br />time: 10<br />loc: -0.57977385<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: 2.17767078<br />time: 10<br />loc: 1.96836964<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: 2.17767078<br />time: 10<br />loc: 1.96836964<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: 0.42301157<br />time: 10<br />loc: 0.43519408<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: 0.42301157<br />time: 10<br />loc: 0.43519408<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: 0.42301157<br />time: 10<br />loc: 1.95712844<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: 0.42301157<br />time: 10<br />loc: 1.95712844<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: -0.62204562<br />time: 10<br />loc: -0.54475380<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: -0.62204562<br />time: 10<br />loc: -0.54475380<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: -0.62204562<br />time: 10<br />loc: -0.54360808<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: -0.62204562<br />time: 10<br />loc: -0.54360808<br />frame: 10<br />colour: ancestral line",null,"pmax(1, time - 1): 9<br />anc.loc: -0.16002011<br />time: 10<br />loc: -0.93928118<br />frame: 10<br />colour: ancestral line","pmax(1, time - 1): 9<br />anc.loc: -0.16002011<br />time: 10<br />loc: -0.93928118<br />frame: 10<br />colour: ancestral line"],"frame":"10","type":"scatter","mode":"lines","line":{"width":1.88976377952756,"color":"rgba(248,118,109,1)","dash":"solid"},"hoveron":"points","name":"ancestral line","legendgroup":"ancestral line","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","visible":true}],"traces":[0,1]}],"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> --- # A little bit of theory For simplicity, assume `\(G_1,\ldots,G_n\)` and `\(f\)` are bounded. **Lack of bias of `\(Z_n^N\)`**: `\(\mathbb{E}[Z_n^N] = Z_n\)`. **Law of large numbers**: `\(Z_n^N \overset{\rm a.s.}{\to} Z_n\)` and `\(\eta_n^N(f) \overset{\rm a.s.}{\to} \eta_n(f)\)` as `\(N \to \infty\)`. **Asymptotic normality**: `\(N^{1/2}(Z_n^N - Z_n)\)` and `\(N^{1/2}(\eta_n^N(f) - \eta_n(f))\)` converge in distribution to (different) normal random variables. **Time uniform convergence**: Under special assumptions one has `$$\sup_{n \geq 1} \mathbb{E}\left [ | \eta_n^N(f) - \eta_n(f) |^2 \right ]^{1/2} \leq \frac{E(f)}{\sqrt{N}}.$$` **Stability of `\(Z_n^N\)` with `\(N \propto n\)`**: Under similar assumptions, `$${\rm var}\left (\frac{Z_n^N}{Z_n} \right ) \leq (1+C/N)^n.$$` --- # Extensions to paths One can also define the measure `$$\bar{\gamma}_n({\rm d}x_1,\ldots,{\rm d}x_n) = \mu({\rm d}x_1)\prod_{p=2}^n G_{p-1}(x_{p-1})M_p(x_{p-1}, {\rm d}x_p).$$` In an HMM: this has density `\(f(x_{1:n}, y_{1:n-1}) \propto f(x_{1:n} \mid y_{1:n-1})\)`. -- By tracing an ancestral line from each of the time `\(n\)` particles, one can define a particle (path) approximation of `\(\bar{\gamma}_n\)`. `$$\bar{\gamma}_n^N(f) = Z_{n-1}^N \frac{1}{N} \sum_{i=1}^N f(\zeta_{1:n}^{\text{path }i}),$$` where the `\(i\)`th path arises by tracing the ancestors of `\(\zeta_n^i\)`. These approximations are unbiased and consistent. A corresponding `\(\bar{\eta}_n^N(f)\)` is obtained by omitting the `\(Z_{n-1}^N\)` term, which is biased but consistent. --- # Ancestral lineages, `\(N=16\)` particles <!-- --> --- # Ancestral lineages, `\(N=256\)` particles <!-- --> --- class: inverse, center, middle # Arbitrary target distributions --- # Flow of distributions The algorithm does not necessarily require a HMM interpretation. One defines `\(\eta_1 = \mu\)` and for `\(p=2,\ldots,n\)` `$$\eta_p = \frac{\eta_{p-1}\cdot G_{p-1}}{\eta_{p-1}(G_{p-1})} M_p = \Phi_p(\eta_{p-1}).$$` -- <br/> <br/> <br/> In order to use SMC for approximating `\(\pi(f)\)` for an arbitrary `\(\pi\)` we just need to construct an appropriate flow from `\(\mu\)` to `\(\pi\)`, where `\(\mu\)` is easy to sample from. --- # An SMC sampler Define `\(\mu = \eta_1 \gg \ldots \gg \eta_n = \pi\)` directly. Then let `$$G_{p-1} \propto \frac{{\rm d}\eta_p}{{\rm d}\eta_{p-1}},$$` and each `\(M_p\)` be a `\(\eta_p\)`-invariant Markov kernel, i.e. `\(\eta_p M_p = \eta_p\)`. -- We can then verify that for `\(p=2,\ldots,n\)`, `$$\frac{\eta_{p-1} \cdot G_{p-1}}{\eta_{p-1}(G_{p-1})}M_p = \eta_p M_p = \eta_p,$$` so the flow is valid. -- In practice, one might choose some `\(\mu \gg \pi\)` and let `$$\eta_p(x) \propto \mu(x)^{1-\beta_p} \pi(x)^{\beta_p},$$` for some `\(0=\beta_1 < \ldots < \beta_n = 1\)`. --- class: inverse, center, middle # Twisted flows --- # Twisting flows A "good" flow has small associated variances. Once you have a flow, you can also *twist* it. A given `\(\mu\)`, `\(M_1,\ldots,M_n\)`, `\(G_1,\ldots,G_n\)`, can be twisted. Notation: `\(M(f)(x) = \int f(x')M(x,{\rm d}x')\)`. -- For some positive functions `\(\psi_1,\ldots,\psi_{n}\)` define `$$\mu^\psi = \frac{\mu \cdot \psi_1}{\mu(\psi_1)}, \qquad G_1^\psi(x) := \frac{G_1(x)M_2(\psi_2)(x)}{\psi_1(x)} \mu(\psi_1),$$` and for `\(p \in \{2,\ldots,n\}\)` `$$M_p^\psi(x, {\rm d}x'):=\frac{M_p(x, {\rm d}x') \cdot \psi_p(x')}{M_p(\psi_p)(x)},\qquad G_p^\psi(x) = \frac{G_p(x)M_{p+1}(\psi_{p+1})(x)}{\psi_p(x)}.$$` Calculations give `\(\eta_p^\psi \propto \eta_p \cdot \psi_p\)` for each `\(p\)`. --- # Optimal twisting functions To obtain a zero variance approximation of `\(Z_n\)`, one should use `$$\psi_p(x) = G_p(x) M_{p+1}(\psi_{p+1})(x).$$` -- In the HMM context, this corresponds to `$$\psi_p(x_p) = f(y_{p:n} \mid x_p).$$` Intuition: particles are weighted by how they explain the "future" as well as the present. -- The optimal `\(\psi\)` functions are typically intractable. But one can use any reasonable approximation. Example: for `\(\psi_p = G_p\)`, one obtains the fully-adapted auxiliary particle filter. Caution: the intermediate distributions are changed by twisting. --- class: inverse, center, middle # Variance estimation --- # How accurate are our SMC approximations? There is substantial theory on how variable SMC approximations are. Especially for the unbiased, "unnormalized" approximations. -- In practice this is not *that* helpful for assessing approximation quality. A simple estimate of the variance can be phrased in terms of the time `\(n\)` particles and the *Eve* indices of those particles: i.e. the index of their time `\(1\)` ancestors, `\(E_n^1,\ldots,E_n^N\)`. <!-- --> --- # Estimate of variance of `\(Z_{n-1}^N\)` Let `\(E_n^j\)` denote the index of the ancestor of `\(\zeta_n^j\)`. Then an estimate of `\({\rm var}(Z_{n-1}^N/Z_{n-1})\)` is `$$V_n^N = 1 - \left (\frac{N}{N-1} \right)^n \left( 1 - \frac{1}{N^2} \sum_{i=1}^N | \{j : E_n^j = i \} |^2 \right)$$` -- If for some `\(k\)`, `\(E_n^1 = \cdots = E_n^N = k\)` then `\(V_n^N = 1\)`. We have lack-of-bias in the sense that `$$\mathbb{E}[(Z_{n-1}^N)^2 V_n^N] = {\rm var}(Z_{n-1}^N).$$` We have consistency in the sense that `$$N V_n^N \overset{P}{\to} \lim_{N \to \infty} N {\rm var}(Z_{n-1}^N/Z_{n-1}).$$` --- # R code ```r VnN <- function(as) { n <- dim(as)[1] + 1 N <- dim(as)[2] eves <- 1:N # recursively update the Eve indices for (p in 1:(n-1)) { eves <- eves[as[p,]] } 1 - (N/(N-1))^n*(1 - sum(table(eves)^2)/N^2) } ``` The estimate depends only on the Eve indices, which depend only on the ancestors. Approximating the variance of `\(\eta_n^N(f)\)` or `\(Z^N_{n-1}\eta_n^N(f)\)` is only a little bit more complicate, and does depend on particle values. --- ```r lZ <- -12.4395996645203368302645685616880655 trials <- 1e3 lZs <- rep(0, trials) Vs <- rep(0, trials) for (i in 1:trials) { out <- smc(mu, M, G, 10, 128) lZs[i] <- out$log.Zs[9] Vs[i] <- VnN(out$as) } mean(exp(lZs-lZ)) # lack-of-bias: should be close to 1 ``` ``` ## [1] 0.9941002 ``` ```r var(exp(lZs-lZ)) # sample relative variance of Zs ``` ``` ## [1] 0.02712202 ``` ```r mean(exp(2*(lZs-lZ))*Vs) # unbiased estimate of relative variance ``` ``` ## [1] 0.02755751 ``` ```r mean(Vs) # biased estimate of relative variance ``` ``` ## [1] 0.02746865 ``` --- <!-- --> ``` ## [1] "VnN(out$as) = 0.032616368188082" ``` --- class: inverse, center, middle # Remarks --- # Remarks SMC is useful for approximating integrals with a particular structure. It can be very computationally efficient in suitable scenarios, e.g. time-uniform convergence. One can also transform complex, high-dimensional integrals into integrals with this structure. Not covered: - Particle MCMC: use of SMC within MCMC, e.g. to infer parameters. - Specific smoothing algorithms. - Refined theoretical results. --- class: center, middle # Thanks!