APTS Statistical Computing: Preliminary Notes

1 Introduction

The APTS Statistical Computing course aims to introduce some rudiments of numerical analysis, which it is helpful to know in order to do good statistics research. To get the most out of the course you need to arrive with a reasonable knowledge of undergraduate statistics/mathematics (see next section), and you need to be able to do some basic R programming.

If you do not have good prior knowledge of R then you should work through Ruth Ripley’s R programming for APTS students notes before these notes. If you are fluent in R it is still a good idea to look through the notes, even if only briefly. Once you are sufficiently well prepared in R programming, please go through the orientiation exercises provided below, which are designed to help you get the most out of the Statistical Computing module. If you can complete the exercises reasonably well, then you are likely to be well prepared for the course. Some pointers to other material on R are also given, along with a list of mathematical and statistical things that the course assumes you already know.

A reasonable attempt at at least exercises 1 through 4 below is essential in order to get the most out of the lab sessions during the APTS week. Just being comfortable that in principle you could do them is not enough in this case. Your solutions to the exercises may help with the labs.

For the lab sessions it will be assumed that you have access to an internet-connected computer running R, and that you can install R packages on it. R is available here. You may also like to install RStudio.

2 Mathematical and statistical preliminaries

Here is a check-list of basic mathematical/statistical concepts that it might help to brush up on if you are a little rusty on any of them:

- The multivariate version of Taylor’s theorem.

- The chain rule for differentiation.

- The multivariate normal distribution and its p.d.f.

- Linear models and the notion of a random effect.

- How to multiply matrices (by each other and with vectors). Matrix addition.

- The meaning of: square matrix, matrix transposition; upper/lower triangular matrix; symmetric matrix; diagonal matrix; trace of a matrix; identity matrix; positive (semi-) definite matrix.

- The notion of a matrix inverse and its relationship to solving the matrix equation \({\bf Ax} = {\bf y}\) for \(\bf x\), where \(\bf A\) is a square matrix and \(\bf y\) and \(\bf x\) are vectors.

- The definition of eigenvectors and eigenvalues.

- The determinant of a matrix.

- Orthogonal matrices. The fact that if \(\bf Q\) is an orthogonal matrix then \({\bf Qy}\) is a rotation/reflection of vector \(\bf y\).

- The rank of a matrix and the problems associated with not being full rank.

Most textbooks with titles like `Mathematics for Engineers/Scientists/Science students’ will provide all that is necessary: pick the one you like best, or is nearest to hand. At time of writing, the Wikipedia entries on the above material looked fairly reasonable.

The course will assume that you know the following basic matrix terminology:

\[ \text{Square:}\ \left ( \begin{array}{ccc} x_{11} & x_{12} & x_{13} \\ x_{21} & x_{22} & x_{23} \\ x_{31} & x_{32} & x_{33} \\ \end{array}\right ) \]

\[ \text{Symmetric:}\ \left ( \begin{array}{ccc} x_{11} & x_{12} & x_{13} \\ x_{12} & x_{22} & x_{23} \\ x_{13} & x_{23} & x_{33} \\ \end{array}\right ) \]

\[ \text{Positive (semi-)definite:}\ {\begin{array}{c}{\bf y}^{\sf T}{\bf Xy} > 0 \ \forall\ {\bf y}\ne {\bf 0}\\ \left ({\bf y}^{\sf T}{\bf Xy} \ge 0 \ \forall\ {\bf y} \ne {\bf 0}\right )\end{array}} \]

\[ \text{Lower triangular:}\ \left ( \begin{array}{ccc} x_{11} & 0 & 0 \\ x_{21} & x_{22} & 0 \\ x_{31} & x_{32} & x_{33} \\ \end{array}\right ) \]

\[ \text{Upper triangular:}\ \left ( \begin{array}{ccc} x_{11} & x_{12} & x_{13} \\ 0 & x_{22} & x_{23} \\ 0 & 0 & x_{33} \\ \end{array}\right ) \]

\[ \text{Diagonal:}\ \left ( \begin{array}{ccc} x_{11} & 0 & 0 \\ 0 & x_{22} & 0 \\ 0 & 0 & x_{33} \\ \end{array}\right ) \]

\(\bf Q\) is an orthogonal matrix iff \({\bf Q} ^{\sf T}{\bf Q} = {\bf QQ} ^{\sf T}= {\bf I}\).

3 Getting help with R

If you are not already familiar with R then work through the R programming for APTS students before doing anything else. In fact, even if you are an expert in R, the notes are worth reviewing.

Here are some pointers to further information:

- An accessible introduction to R is The Art of R programming, which might be available in your university’s library.

- For more advanced material see Advanced R, which is freely available online.

- An introduction to R is another good place to start. See the list of R manuals developed by the R Development Core Team.

- See also this and this list of books on R.

- From the R command line, typing

help.start()will launch an html version of the R help system: this is probably the best way to access the online help when starting out.

4 Some exercises

The solutions to these exercises will appear on the APTS web site one week before the start of the course. You will get most out of the exercises by attempting them before looking at the solutions.

Computers do not represent most real numbers exactly. Rather, a real number is approximated by the nearest real number that can be represented exactly (floating point number), given some scheme for representing real numbers as fixed length binary sequences. Often the approximation is not noticeable, but in some cases it can make a big difference relative to exact arithmetic (imagine that you want to know the difference between 2 distinct real numbers that are approximated by the same binary sequence, for example).

One consequence of working in finite precision arithmetic is that for any number \(x\), there is a small number \(\epsilon\) for which \(x+\epsilon\) is indistinguishable from \(x\) (with any number smaller than \(\epsilon\) having the same property).

- Try out the following code to find the size of this number, when \(x=1\).

eps <- 1 x <- 1 while (x+eps != x) eps <- eps/2 eps/x- Confirm that the final

epshere is close to the largest \(\epsilon\) for which \(x\) and \(x+\epsilon\) give rise to the same floating point number. 2*epsis stored in R as.Machine$double.eps. Confirm this.- Confirm that you get the same

eps/xvalue whenxequals 1/8,1/4,1/2,1,2, 4 or 8. - Now try some numbers which are not exactly representable as modest powers of 2, and note the difference.

- In terms of decimal digits, roughly how accurately are real numbers numbers being represented here?

R is an interpreted language. Instructions are interpreted on the fly. This tends to mean that it is efficient to code in such a way that many calculations are performed per interpreted instruction. Often this implies that loops should be avoided, otherwise R can spend much more time interpreting the instructions to carry out a calculation than on performing the calculation itself.

Re-write the following to eliminate the loops, first using

applyand then usingrowSumsX <- matrix(runif(100000),1000,1000) z <- rep(0,1000) for (i in 1:1000) { for (j in 1:1000) z[i] <- z[i] + X[i,j] }Confirm that all three versions give the same answers, but that your re-writes are much faster than the original.

system.timeis a useful function for this.Re-write the following, replacing the loop with efficient (i.e. vectorised) code.

n <- 500000 z <- rnorm(n) zneg <- 0 j <- 1 for (i in 1:n) { if (z[i] < 0) { zneg[j] <- z[i] j <- j + 1 } }Confirm that your re-write is faster but gives the same result.

Note that while it is important to avoid loops which perform a few computationally cheap operations at each step, little will be gained by removing loops where each pass of the loop is itself irreducibly expensive.

Run the following code

set.seed(1) n <- 1000 A <- matrix(runif(n*n),n,n) x <- runif(n)Evaluate \({\bf x}^{\sf T}{\bf A}{\bf x}\), \({\rm tr}({\bf A})\) and \({\rm tr}({\bf A}^{\sf T}{\bf WA})\) where \(\bf W\) is the diagonal matrix such that \(W_{ii} = x_i\).

Consider solving the matrix equation \({\bf Ax} = {\bf y}\) for \(\bf x\), where \(\bf y\) is a known \(n\) vector and \(\bf A\) a known \(n \times n\) matrix. The formal solution to the problem is \({\bf x} = {\bf A}^{-1} {\bf y}\), but it is possible to solve the equation directly, without actually forming \({\bf A}^{-1}\). This question will explore this a little. Read the help file for

solvebefore trying it.- First create an \(\bf A\), \(\bf x\) and \(\bf y\) satisfying \({\bf Ax} = {\bf y}\).

set.seed(0) n <- 5000 A <- matrix(runif(n*n),n,n) x.true <- runif(n) y <- A%*%x.trueThe idea is to experiment with solving the \({\bf Ax} = {\bf y}\) for \(\bf x\), but with a known truth to compare the answer to.

Using

solveto compute the matrix \({\bf A}^{-1}\) explicitly, and then form \({\bf x}_1 = {\bf A}^{-1} {\bf y}\). Note how long this takes. Also assess the mean absolute difference betweenx1andx.true(the approximate mean absolute error in the solution).Now use

solveto directly solve \({\bf Ax} = {\bf y}\) for \(\bf x\), without forming \({\bf A}^{-1}\). Note how long this takes and assess the mean absolute error of the result.What do you conclude?

The empirical cumulative distribution function for measurements \(x_1,\ldots,x_n\) is \[ \hat F(x) = \frac{| \{i : x_i < x \} |}{n} \] where \(| \{i : x_i < x \} |\) denotes the number of \(x_i\) values less than \(x\).

When answering the following, try to ensure that your code is commented, clearly structured, and tested. To test your code, generate random samples using

rnorm,runifetc.Write an R function which takes an un-ordered vector of observations

xand returns the values of the empirical c.d.f. for each value, in the order corresponding to the originalxvector. (See?sort.int.)Modify your function to take an extra argument

plot.cdf, which whenTRUEwill cause the empirical c.d.f. to be plotted as a step function, over a suitable \(x\) range.

This (slightly more challenging) question is about function writing and about quadratic approximations, which play an important part in statistics. Review Taylor’s theorem (multivariate) before starting.

The Rosenbrock’s function is a simple and useful test function for optimization methods: \[ f(x,z) = 100(z-x^2)^2 + (1-x)^2 \]

Write an R function,

rb, which takes as arguments the equal length vectorsxandzand returns the vector of values of Rosenbrock’s function at eachx[i],z[i].Produce a contour plot of the function over the rectangle: \(-1.5 < x < 1.5\), \(-0.5 < z < 1.5\). Functions

contourandouterare useful here.For visualization purposes it may be more useful to contour the

log10of the function, and to adjust the defaultlevelsargument.Write an R function

rb.gradwhich takes single values for each of \(x\) and \(z\) as arguments, and returns the gradient vector of Rosenbrock’s function, i.e. a vector of length 2 containing \(\partial{f}/\partial{x}\) and \(\partial{f}/\partial{z}\) evaluated at the supplied \(x,z\) values (you need to first differentiate \(f\) algebraically to do this).Test

rb.gradby finite differencing. That is by comparing the derivatives it produces to approximations calculated using using \[ \frac{\partial{f}}{\partial{x}} \simeq \frac{f(x+\Delta) - f(x) }{\Delta} \] (and similar for \(z\)). Use \(\Delta = 10^{-7}\) (Delta <- 1e-7).Write an R function

rb.hesswhich takes single values for each of \(x\) and \(z\) as arguments, and returns a \(2 \times 2\) Hessian matrix of Rosenbrock’s function, \({\bf H}\), where \[ H_{11} = \frac{\partial^2{f}}{\partial{x^2}},~~~ H_{22} = \frac{\partial^2{f}}{\partial{z^2}}, {\rm ~~and~~} H_{12} = H_{21} = \frac{\partial^2{f}}{\partial{x}\partial{z}}. \] (evaluated at the supplied \(x,z\) values, of course).Test

rb.hessby finite differencing the results fromrb.grad.Taylor’s theorem implies that you can use

rb,rb.gradandrb.hessto produce a quadratic function approximating \(f(x,z)\) in the neigbourhood of any particular point \(x^*, z^*\). Write an R function to find such an approximation, given a point \(x^*, z^*\), and to add a contour plot of the approximation to an existing contour plot of \(f\) (seeaddargument ofcontour). Your function should accept an argumentcolallowing you to set the colour of the contours of the quadratic approximation. Do make sure that the same transformation (if any) and levels are used when contouring the approximation and \(f\) itself.Plot the quadratic approximations centred on (-1,0.5), (0,0) and the function’s minimum (1,1). Note the somewhat limited region over which the quadratic approximation is reasonable, in each case.

The quadratic approximations in the last part all had well defined minima. When this is the case, the vector from the approximation point, \(x^*, z^*\), to that minimum is a `descent direction’— moving along this direction from the approximation point leads to decrease of the function \(f\), at least in the vicinity of \(x^*, z^*\) (provided we are not already at the function minimum, in which case minima of approximation and function coincide). The quadratic approximation is not always so well behaved. Try plotting the approximation at (.5,.5), for example (you may need to adjust the plotting scales to do this — the approximation is no longer always positive).

By inspection, Rosenbrock’s function has a minimum of 0 at \((1,1)\), but it is useful test function for optimization methods. As an introduction to numerical optimization it is instructive to try out some of the optimization methods supplied in the R function

optim.Read the help file

?optim, noting in particular the required arguments, how to select the optimization method, and what the function returns.Write a version of Rosenbrock’s function in which arguments \(x\) and \(z\) are supplied as first and second elements of a single vector, so that this function is suitable for passing as the

fnargument ofoptim. Do the same for the gradient function for Rosenbrock’s function, so that it is suitable for supplying as thegrargument tooptim. The easiest way to do this is to write simple `wrapper’ functions that callrbandrb.gradcreated in the previous question.From the initial point \(x=-0.5\), \(z = 1\) use

optimto minimize Rosenbrock’s function, using the default Nelder-Mead method. Check whether the method appears to have converged properly, and note the accuracy with which the minimum has been located.From the same starting point, retry the optimization using the

BFGSmethod. Do not supply agrfunction, for the moment (which means thatoptimwill have to approximate the derivatives required by the method). Note the number of function evaluations used, and the apparent accuracy with which the minimum has been located.Repeat the optimization in (d) but this time provide a

grargument tooptim. Compare the number of evaluations used this time, and the accuracy of the solution.Now repeat the optimization once more using the

CGmethod (withgrargument supplied). How far do you have to increase themaxitargument ofoptimto get convergence?

Nelder-Mead and the BFGS method will be covered in the course. The CG (Conjugate Gradient) method will not be (although it is sometimes the only feasible method for very large scale optimization problems).

Once you have completed the exercises, read on. The solutions will be posted on the APTS website.

5 Why bother learning about numerical computation?

In combination, modern computer hardware, algorithms and numerical methods are quite impressive. Take a moment to consider some simple examples, all using R.

Simulate a million random numbers and then sort them into ascending order.

x <- runif(1000000) system.time(xs <- sort(x))The sorting operation took 0.1 seconds on the laptop used to prepare these notes. If each of the numbers in

xhad been written on its own playing card, the resulting stack of cards would have been 40 metres high. Imagine trying to sortxwithout computers and clever algorithms.Here is a matrix calculation of comparatively modest dimension, by modern statistical standards.

set.seed(2) n <- 1000 A <- matrix(runif(n*n),n,n) system.time(Ai <- solve(A)) ## invert A range(Ai%*%A-diag(n)) ## check accuracy of resultThe matrix inversion took under half a second to perform, and the inverse satisfied its defining equations to around one part in \(10^{12}\). It involved around 2 billion (\(2 \times 10^9\)) basic arithmetic calculations (additions, subtractions multiplications or divisions). At a very optimistic 10 seconds per calculation this would take 86 average working lives to perform without a computer (and that’s assuming we use the same algorithm as the computer, rather than Cramer’s rule, and made no mistakes). Checking the result by multiplying the

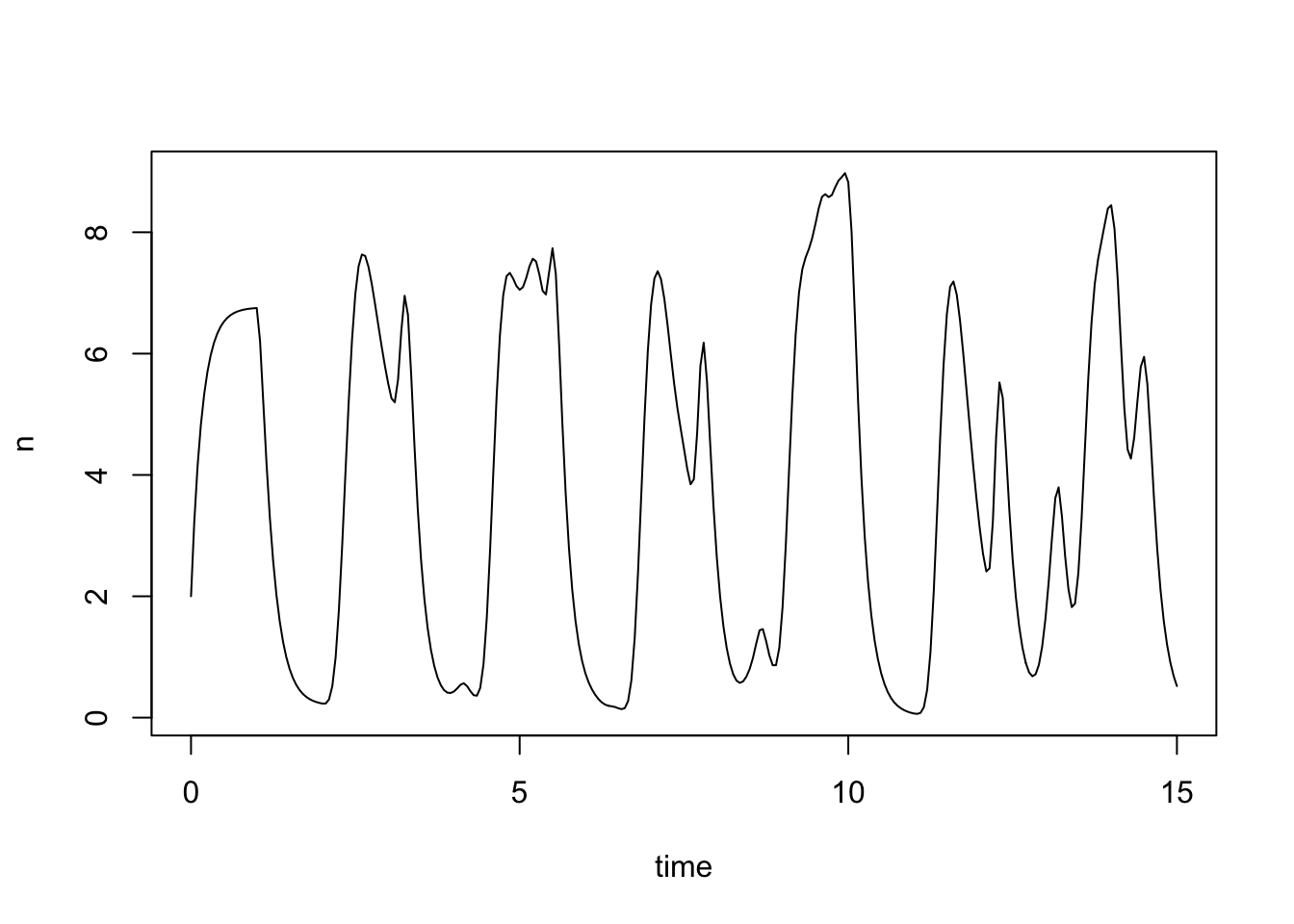

Aby the resulting inverse would take another 86 working lives.The preceding two examples are problems in which smaller versions of the same problem can be solved more or less exactly without computers and special algorithms or numerical methods. In these cases, computers allow much more useful sizes of problem to be solved very quickly. Not all problems are like this, and some problems can really only be solved by computer. For example, here is a scaled version of a simple delay differential equation model of adult population size, \(n\), in a laboratory blowfly culture \[ \frac{{\rm d}n}{{\rm d}t} = P n(t-1) e^{-n(t-1)} - \delta n(t). \] The dynamic behaviour of the solutions to this model is governed by parameter groups \(P\) and \(\delta\), but the equation cannot be solved analytically, from any interesting set of initial conditions. To see what solutions actually look like we must use numerical methods.

#install.packages("PBSddesolve") ## install package from CRAN library(PBSddesolve) bfly <- function(t,y,parms) { ## function defining rate of change of blowfly population, y, at ## time t, given y, t, lagged values of y form `pastvalue' and ## the parameters in `parms'. See `ddesolve' docs for more details. ## making names more useful ... P <- parms$P; d <- parms$delta; y0 <- parms$yinit if (t<=1){ y1 <- P*y0*exp(-y0)-d*y } else { ## initial phase ylag <- pastvalue(t-1) ## getting lagged y y1 <- P*ylag*exp(-ylag) - d*y ## the gradient } y1 ## return gradient } ## set parameters... parms <- list(P=150,delta=6,yinit=2.001) ## solve model... yout <- dde(y=parms$yinit,times=seq(0,15,0.05),func=bfly,parms=parms) ## plot result... plot(yout$time,yout$y1,type="l",ylab="n",xlab="time")

Here the solution of the model (to an accuracy of 1 part in \(10^8\)), which took a quarter of a second. See, e.g., here for more details on this model and data.

It is not unusual for statistical inference to involve the computation of integrals. For example, the posterior mean of some statistical parameter. To avoid distracting statistical motivation, consider how you might approximate numerically the integral \[ \int_{[0,\frac{\pi}{2}]^d} f (x_1,\ldots,x_d) ~{\rm d}x_{1:d} \] with only the ability to compute \(f\) at arbitrary points. For a concrete example, one could consider the integral \[ \int_{[0,\frac{\pi}{2}]^d} \sin \left ( \sum_{i=1}^d x_i \right ) {\rm d}x_{1:d}, \] which happens to be analytically tractable for a given \(d\) using iterated integrals but is otherwise a decent example of a relatively simple integral that can be challenging but not impossible for numerical methods to tackle. For small \(d\), we will see in this course that the smoothness of the \(\sin\) function allows one to obtain very accurate approximations using quadrature methods. For large \(d\) such approaches are no longer suitable and (simple) Monte Carlo methods are more appropriate. For example, one can rewrite the integral as \[ \int_{[0,\frac{\pi}{2}]^d} \sin \left ( \sum_{i=1}^d x_i \right ) {\rm d}x_{1:d} = \left ( \frac{\pi}{2} \right )^d \mathbb{E} \left [ \sin \left (\sum_{i=1}^d X_i \right ) \right ], \] where \(X_1,\ldots,X_d\) are i.i.d. \({\rm Uniform}(0,\pi/2)\) random variables, and approximate the expectation using appropriate random variates. For \(d=17\), we obtain the following approximation in a few seconds

d <- 17 N <- 1e6 s <- 0 for (i in 1:N) { x <- runif(d)*pi/2 s <- s + sin(sum(x)) } (pi/2)^d*s/N[1] 254.7524which gives a pretty good approximation of the true value, which is \(256\).

5.1 Things can go wrong

The amazing combination of speed and accuracy apparent in many computer algorithms and numerical methods can lead to a false sense of security and the wrong impression that, when translating mathematical and statistical ideas into computer code, we can simply treat the numerical methods and algorithms we use as infallible ‘black boxes’. This is not the case, as the following three simple examples should illustrate. You may be able to figure out the cause of the problem in each case, but don’t worry if not: we will return to each of the examples during the course.

The

system.timefunction in R measures how long a particular operation takes to execute. First generate two \(2000 \times 2000\) matrices, \(\bf A\) and \(\bf B\) and a vector, \(\bf y\).n <- 2000 A <- matrix(runif(n*n),n,n) B <- matrix(runif(n*n),n,n) y <- runif(n)Now form the product \(\bf ABy\) in two slightly different ways

system.time(f0 <- A%*%B%*%y)user system elapsed 0.062 0.005 0.037system.time(f1 <- A%*%(B%*%y))user system elapsed 0.005 0.000 0.005The vectors

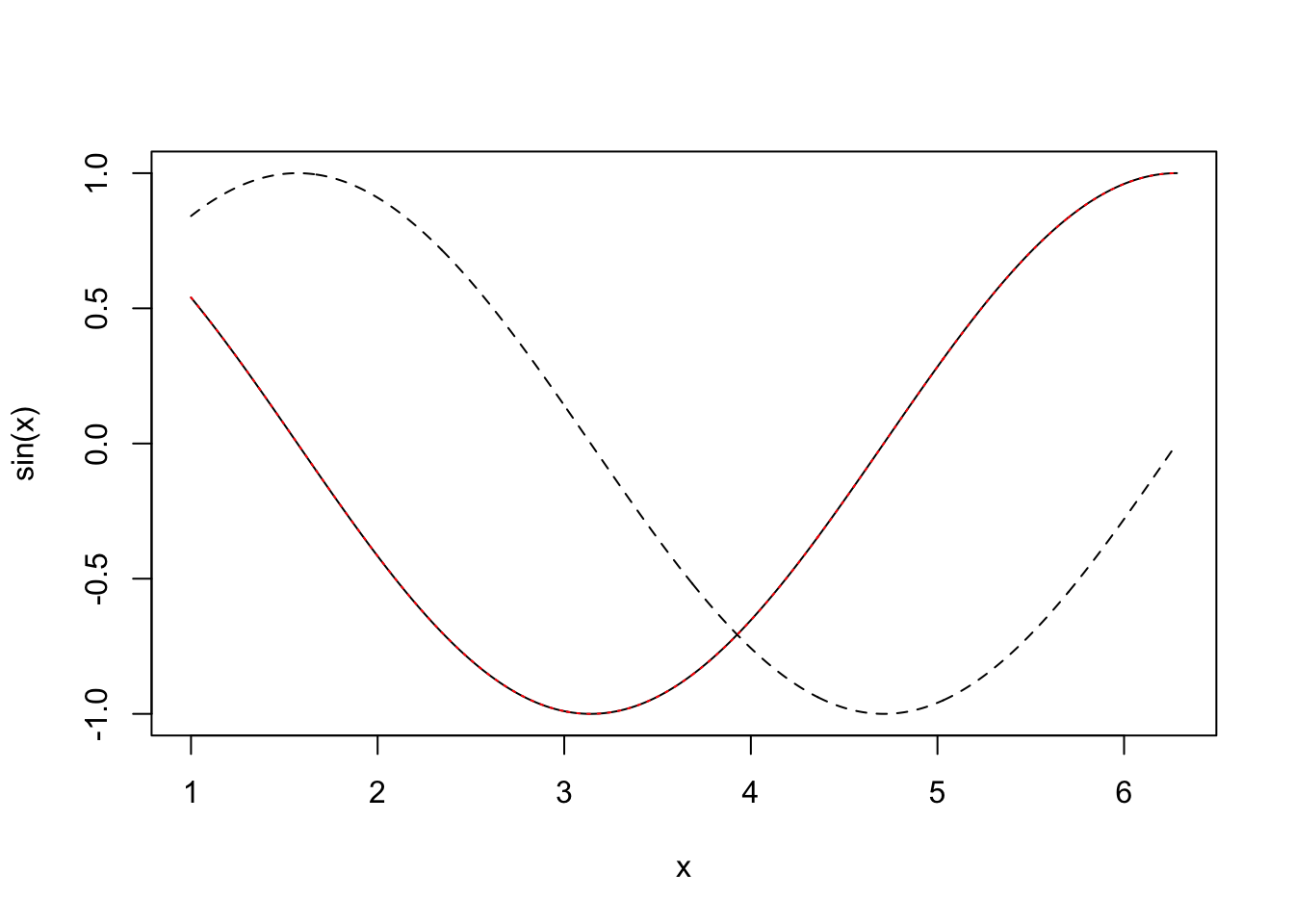

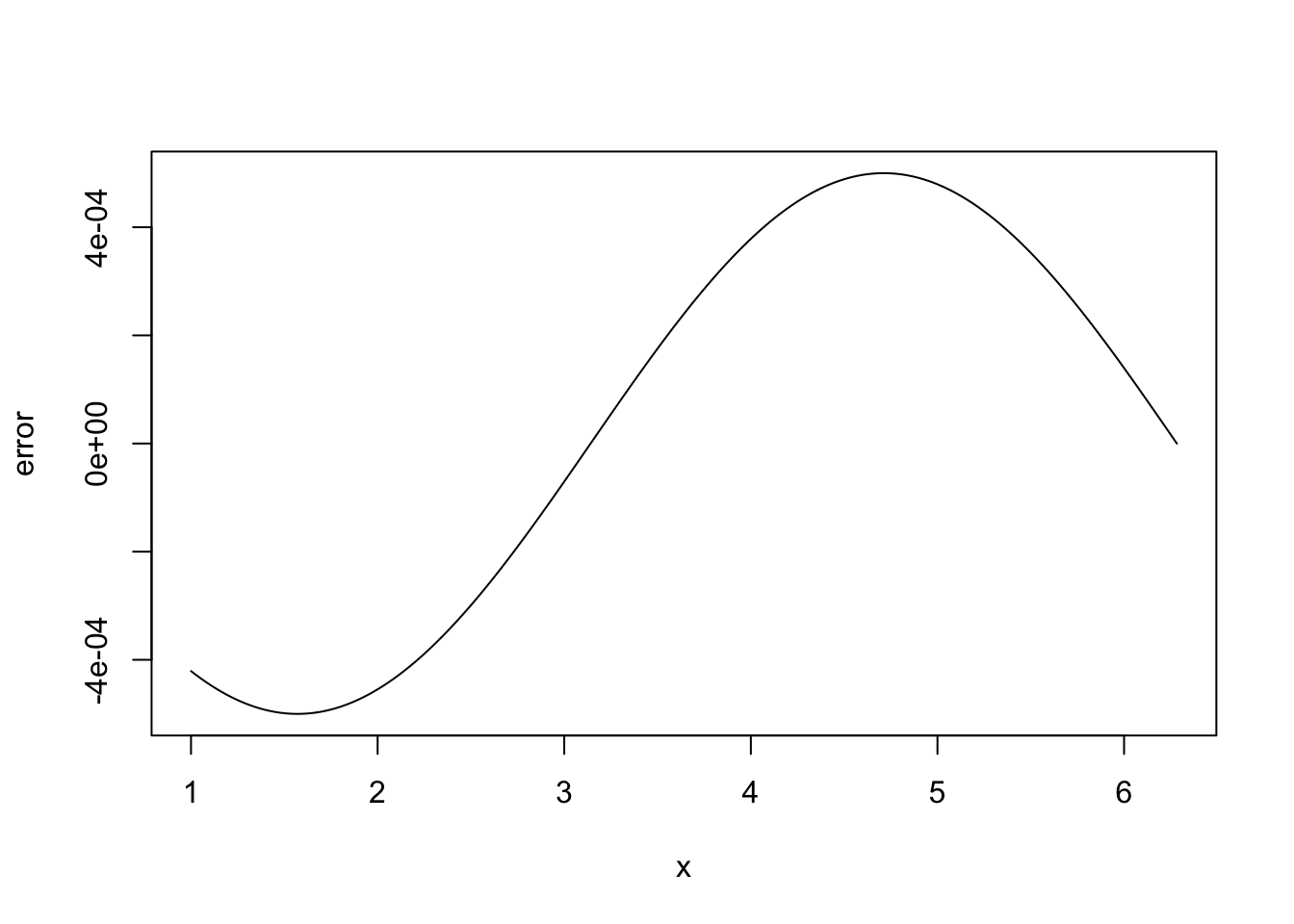

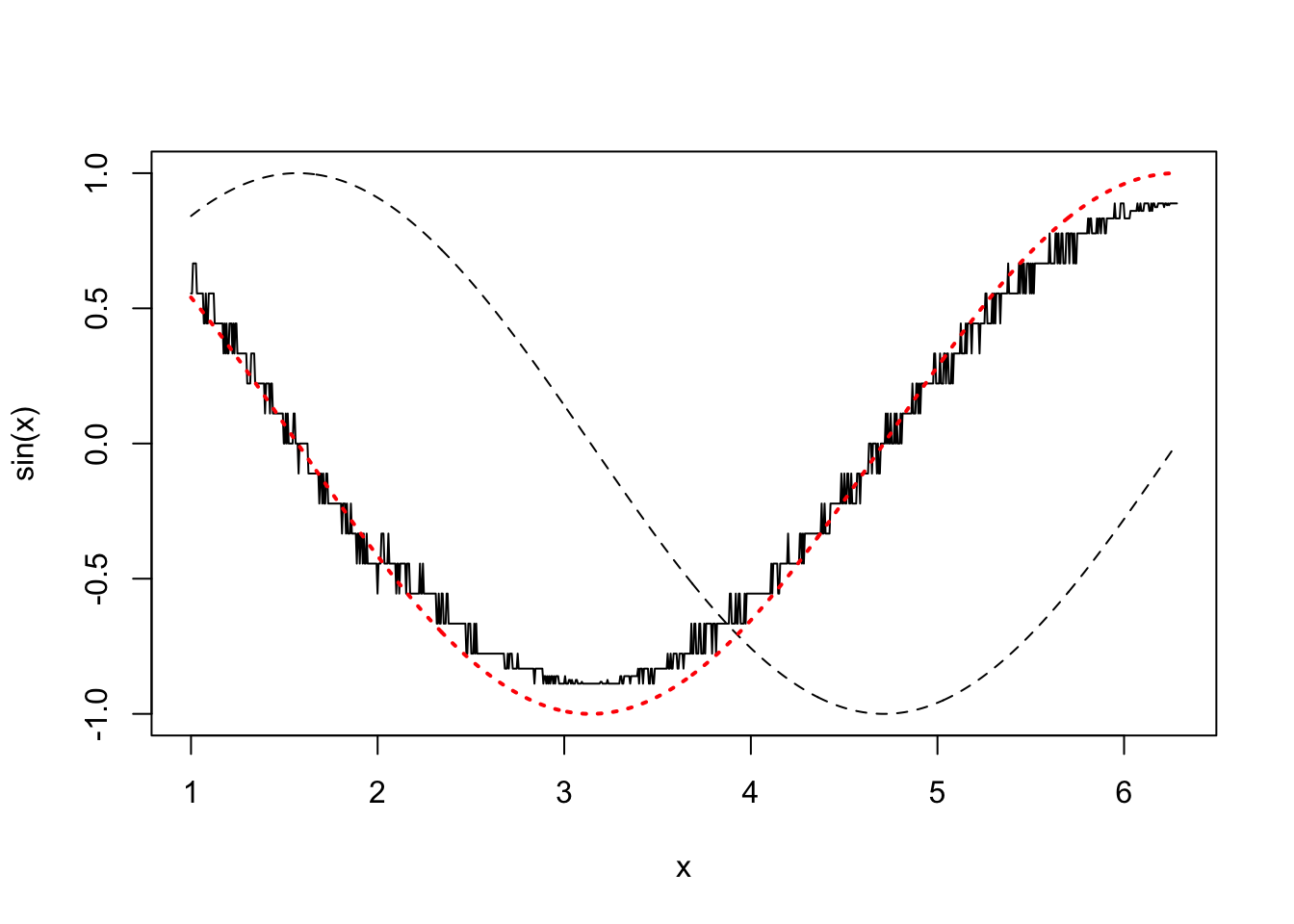

f0andf1are identical (to machine precision), so why is one calculation so much quicker than the other? Clearly anyone wanting to compute with matrices had better know the answer to this.It is not unusual to require derivatives of complicated functions, for example when fitting models to data. Such derivatives can be very tedious or difficult to calculate directly, but can be approximated quite easily by `finite differencing’. e.g. if \(f\) is a function of \(x\) then \[ \frac{\partial{f}}{\partial{x}} \simeq \frac{f(x+\Delta) - f(x)}{\Delta} \] where \(\Delta\) is a small interval. Under assumptions on the smoothness of \(f\), Taylor’s theorem implies that the right hand side tends to the left hand side as \(\Delta \to 0\). It is check this on an example where the answer is known, so consider differentiating \(\sin(x)\) w.r.t. \(x\).

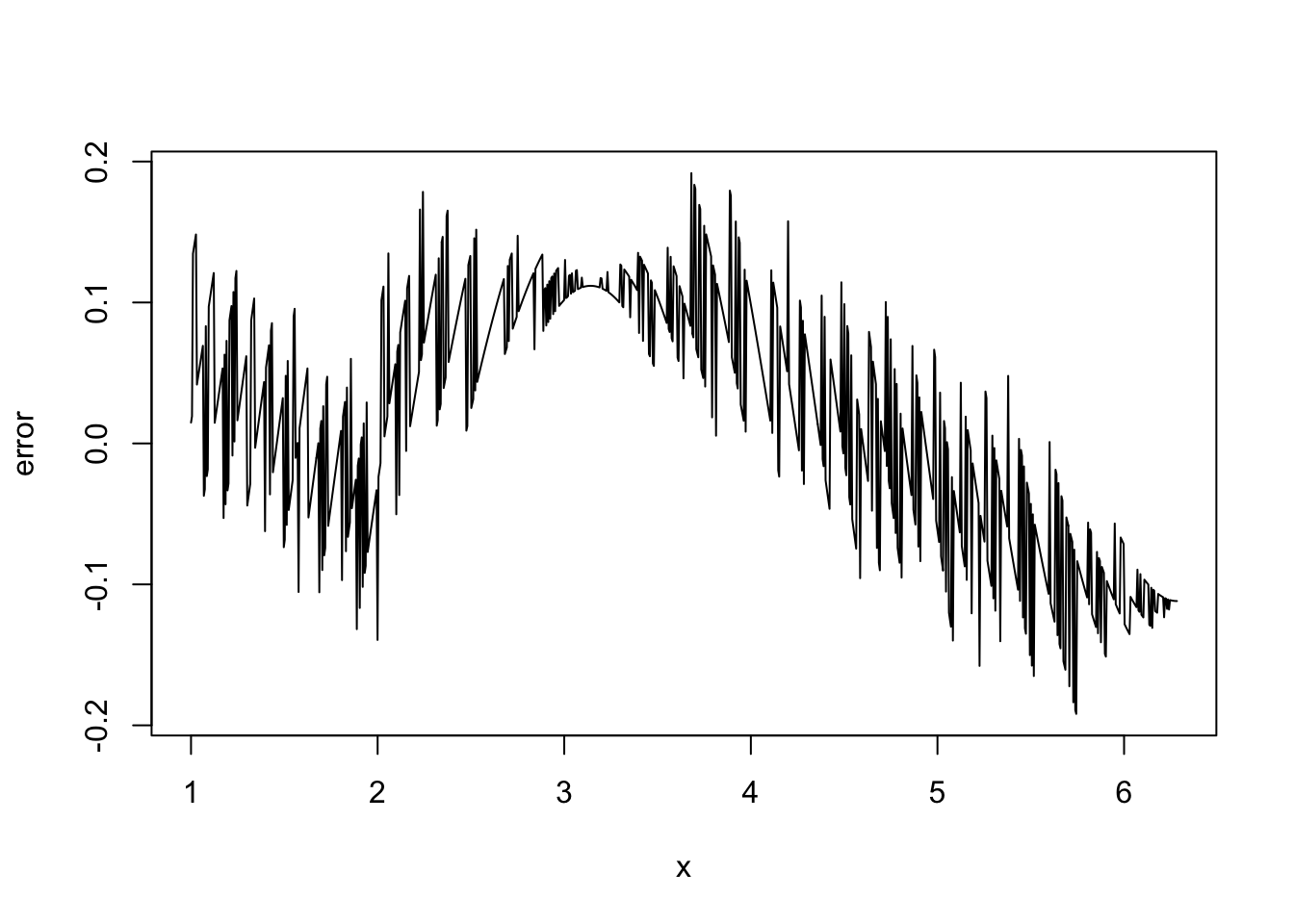

x <- seq(1,2*pi,length=1000) delta <- 1e-3 ## FD interval dsin.dx <- (sin(x+delta)-sin(x))/delta ## FD derivative error <- dsin.dx-cos(x) ## error in FD derivativeThe following code plots the results.

plot(x,sin(x),type="l", lty = 2) lines(x,dsin.dx) lines(x,cos(x),lty="dotted",col="red")

plot(x,error,type="l")

In the first plot, above, we show \(\sin(x)\) (dashed black), it’s true derivative \(\cos(x)\) (dotted red) and the FD derivative (solid black). The second plot shows the difference between the FD approximation and the truth. Note that the error appears to be of about the same magnitude as the finite difference interval \(\Delta\). Perhaps we could reduce the error to around the machine precision by using a much smaller \(\Delta\). Here is the equivalent of the previous plot, when

deltais1e-15

Clearly there is something wrong with the idea that decreasing \(\Delta\) must always increase accuracy here.

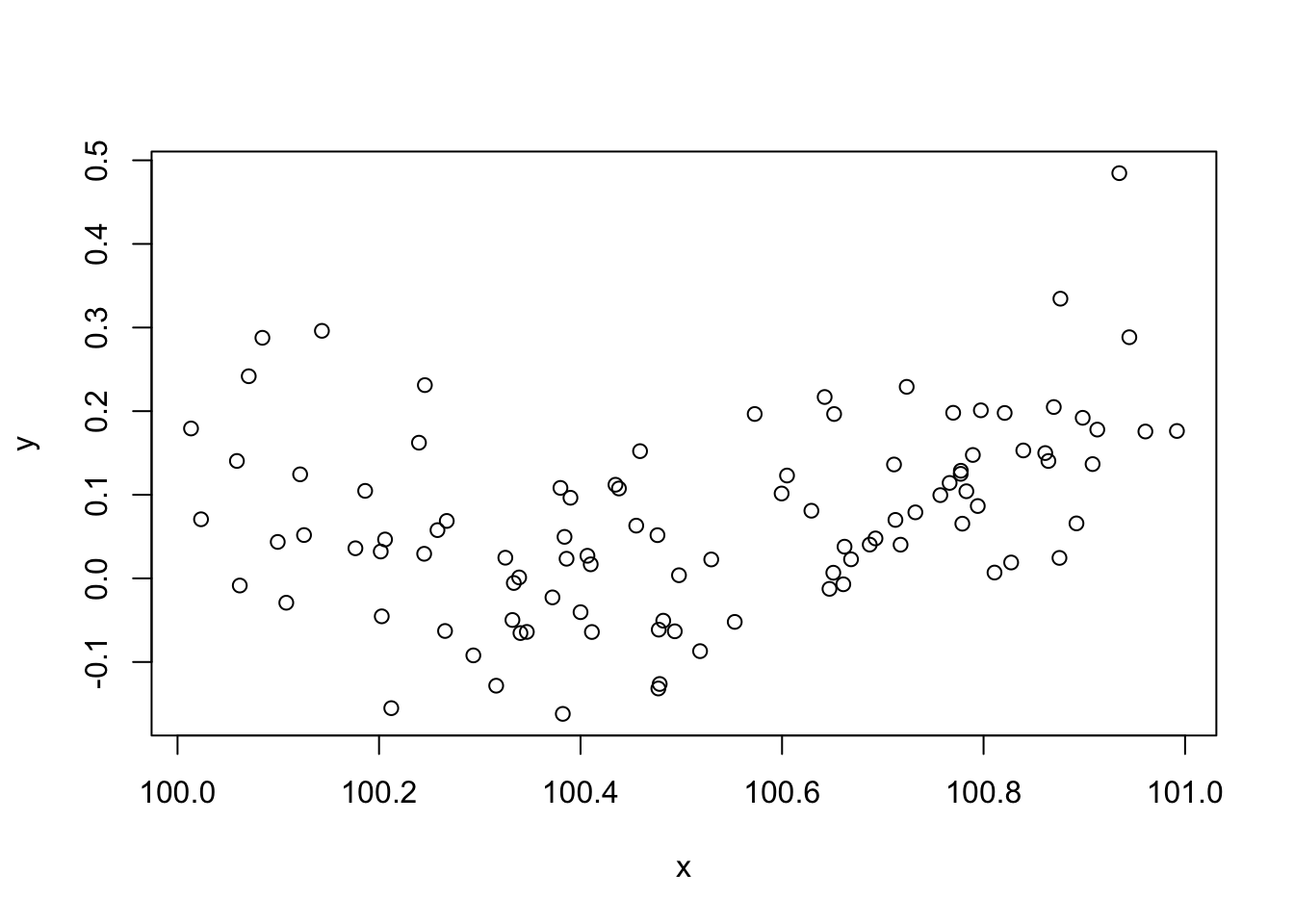

Consider the data generated by this code:

set.seed(1); n <- 100 xx <- sort(runif(n)) y <- 0.2*(xx-0.5)+(xx-0.5)^2 + rnorm(n)*0.1 x <- xx+100 plot(x,y)

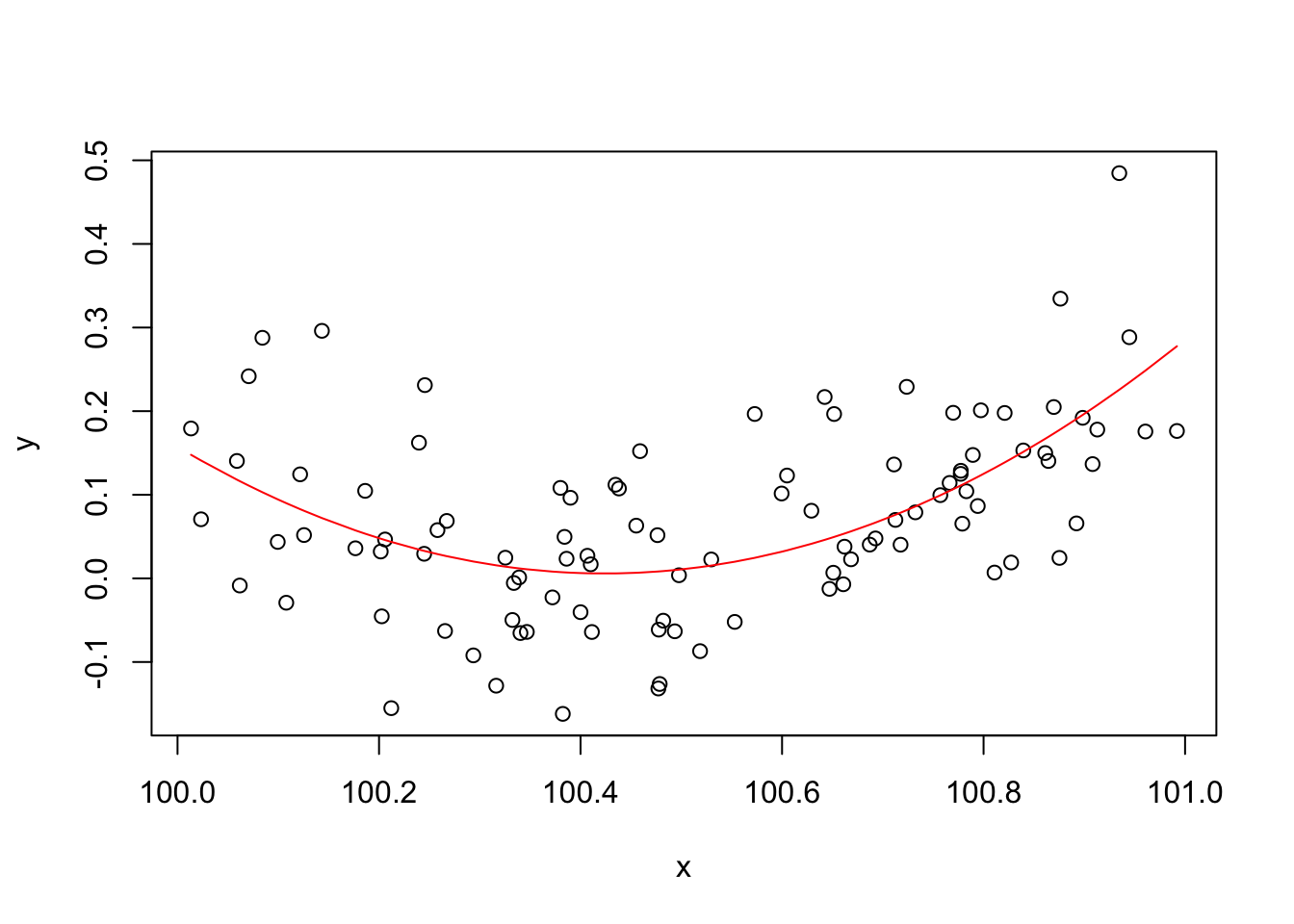

An appropriate and innocuous linear model for these data is \(y_i = \beta_0 + \beta_1 x_i + \beta_2 x_i^2 + \epsilon_i\), where the \(\epsilon_i\) are independent, zero mean constant variance r.v.s and the \(\beta_j\) are parameters. This can be fitted in R, and the curve plotted, with

b <- lm(y~x+I(x^2)) plot(x,y) lines(x,b$fitted.values,col="red")

From basic statistical modelling courses, recall that the least squares parameter estimates for a linear model are given by \(\hat {\boldsymbol{\beta}}= ({\bf X}^{\sf T}{\bf X})^{-1}{\bf X}^{\sf T}{\bf y}\) where \({\bf X}\) is the model matrix. An alternative to use of

lmwould to use the above expression for \(\hat {\boldsymbol{\beta}}\) directly, as done by the following code:X <- model.matrix(b) ## get same model matrix used above, see next line head(X)(Intercept) x I(x^2) 1 1 100.0134 10002.68 2 1 100.0233 10004.67 3 1 100.0589 10011.79 4 1 100.0618 10012.36 5 1 100.0707 10014.14 6 1 100.0842 10016.86## direct solution of the so-called `normal equations' beta.hat <- solve(t(X)%*%X, t(X)%*%y)Error in solve.default(t(X) %*% X, t(X) %*% y): system is computationally singular: reciprocal condition number = 3.98648e-19Something has gone badly wrong here, and this is a rather straightforward model. So, it’s important to understand what has happened before trying to write code to apply more sophisticated approaches to more complicated models.

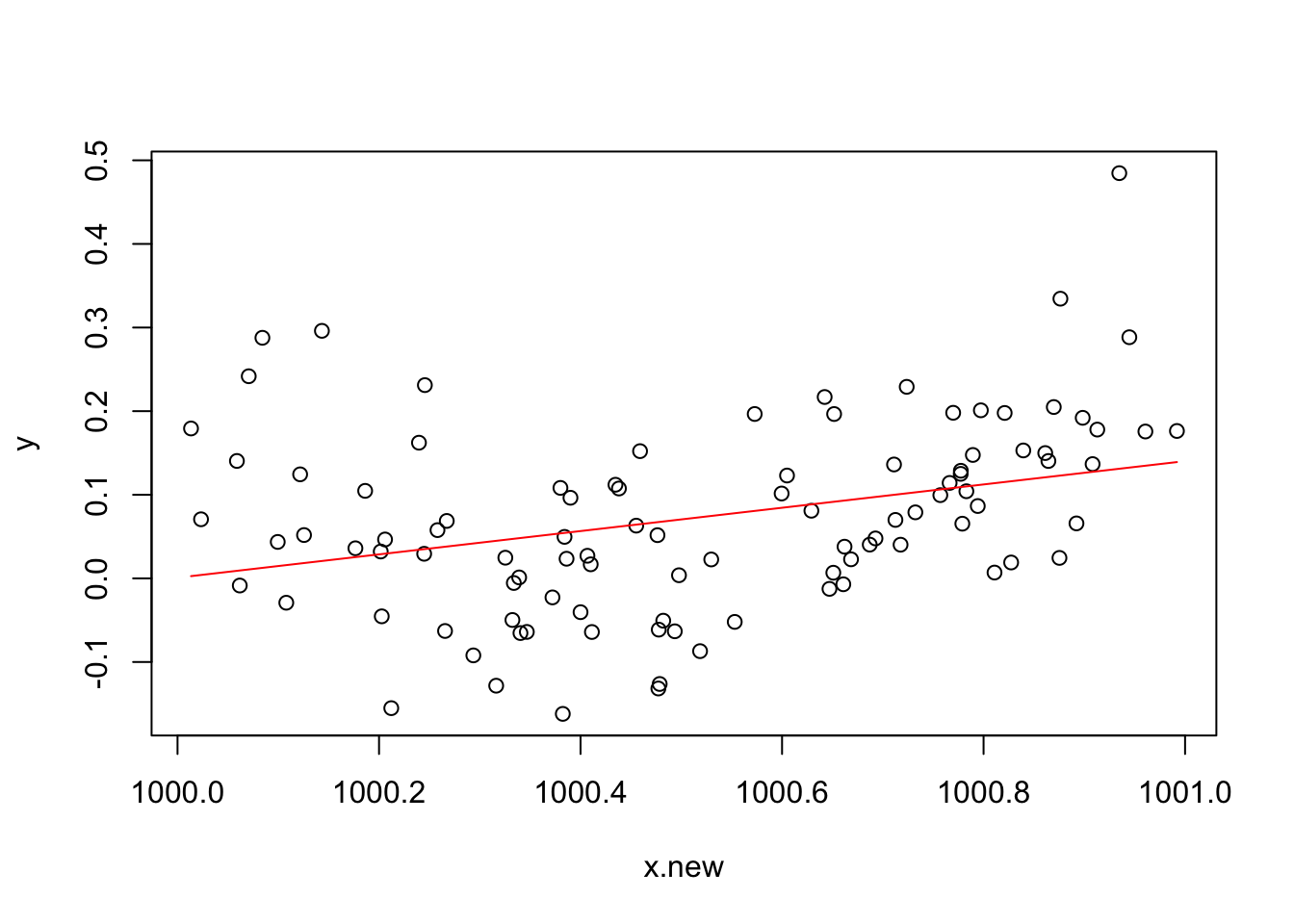

In case you are wondering, one can make

lmfail too. Mathematically it is easy to show that the model fitted values should be unchanged if we simply add 900 to thexvalues, but if we refit the model to such shifted data usinglm, we get the followingx.new <- x+900 b <- lm(y~x.new+I(x.new^2)) plot(x.new,y) lines(x.new,b$fitted.values,col="red")

The shape of the fit has clearly changed, which not be correct. In this particular,

lmfailed to estimate the regression coefficient of \(x^2\), as can be checked by typing:coef(b)(Intercept) x.new I(x.new^2) -139.3926937 0.1393935 NAand the fit stored in

b$fitted.valuesis based only on the first two regression coefficients.

6 Further reading

The course itself is supposed to provide an introduction to some numerical analysis that is useful in statistics, but if you can’t wait to get started than here is a list of some possibilities for finding out more.

Burden, R & J.D. Faires (2005) Numerical Analysis (8th ed) Brooks Cole. This is an undergraduate text covering some of the material in the course: the initial chapter is a good place to start for a discussion of computer arithmetic.

Press WH, SA Teukolsky, WT Vetterling and BP Flannery (2007) Numerical Recipes (3rd ed), Cambridge. This covers most of what we will cover in an easily digested form, but, as the name suggests, it is structured for those wanting to perform a particular numerical task, rather than for providing overview. The brief introduction to Error, Accuracy and Stability of numerical methods is well worth reading. (For random number generation the 3rd edition is a better bet than earlier ones).

Monahan, J.F. (2001) Numerical Methods of Statistics, Cambridge. This covers most of the course material and more, in a bit more depth than the previous books. Chapters 1 and 2 definitely provide useful background on good coding practice and the problems inherent in finite precision arithmetic. The rest of the book provides added depth on many of the other topics that the course will cover.

Lange, K. (2000) Numerical Analysis for Statisticians, Springer. This covers a more comprehensive set of material than the previous book, at a similar level, although with less discussion of the computational nitty-gritty.

If you want more depth still:

Golub, GH and CF Van Loan (1996) Matrix Computations 3rd ed. John Hopkins. This is the reference for numerical linear algebra, but not the easiest introduction.

Watkins, DS (2002) Fundamentals of Matrix Computations 2nd ed. Wiley. This is a more accessible book, but still aimed at those who really want to understand the material (for example, the author encourages you to write your own SVD routine, and with this book you can).

Nocedal and Wright (2006) Numerical Optimization 2nd ed. Springer, is a very clear and up to date text on optimization, which also covers automatic differentiation with great clarity.

Gill, P.E. , W. Murray and M.H. Wright (1981) Practical Optimization Academic Press, is a classic, particularly on constrained optimization. Chapter 2 is also an excellent introduction to computer arithmetic, numerical linear algebra and stability.

Ripley, B.D. (1987) Stochastic Simulation Wiley (re-published 2006). A classic on the subject. Chapters 2 and 3 are particularly relevant.

Davis, P. J., and P. Rabinowitz (1984). Methods of Numerical Integration. Academic Press Inc.

Liu, J (2001). Monte Carlo Strategies in Scientific Computing. Springer.

Robert, CP (1999). Monte Carlo Statistical Methods. Springer–Verlag.

Cormen, T.H., C.E. Leiserson, R.L. Rivest and C. Stein (2001) Introduction to Algorithms (2nd ed.) MIT. Working with graphs, trees, complex data structures? Need to sort or search efficiently? This book covers algorithms for these sorts of problems (and a good deal more) in some depth.